
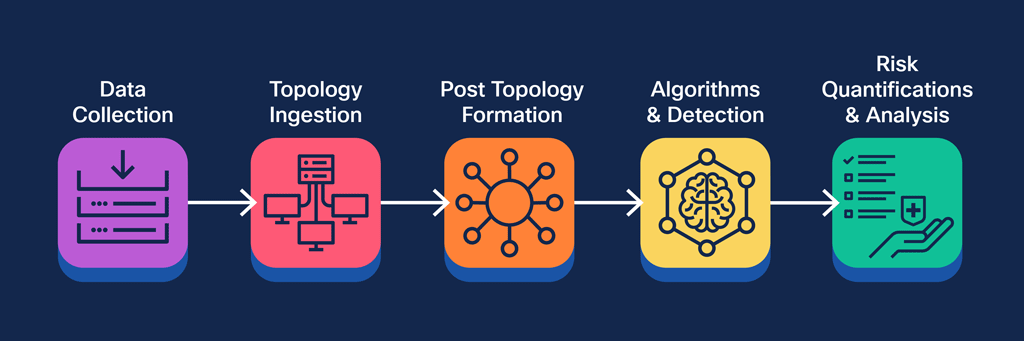

In this article, we will break down the complex orchestration required to form a cloud environment graph and use it to detect and prioritize attack paths. One of the most valuable security outputs that cloud owners can unlock through this methodology is context-based insight into threats evolving from both inside and outside their cloud environment.

The cloud graph workflow is made of loosely coupled, collaborating services, so it follows a microservice architecture in which each microservice has one main task to complete. These services communicate through small middleware agents that act as message brokers, allowing the output of one microservice to become the input of another. This architecture supports dynamic development with minimal dependencies between separate logical functions, as well as easier debugging and better scalability.

Figure 1: Representation of end-to-end graph-based attack path microservices architecture
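To make the broker pattern concrete, here is a minimal sketch in which in-process `queue.Queue` objects stand in for the message brokers. The service names, the message shape, and the `vendor:id` GGID format are all hypothetical; a production pipeline would use a real broker between separately deployed services rather than queues in one process.

```python
from queue import Queue

# Hypothetical in-process stand-ins for message brokers, one per hop.
collector_to_topology: Queue = Queue()
topology_to_detection: Queue = Queue()

END = None  # sentinel marking the end of a collection session


def collector_service(raw_objects, out_q):
    """Collector: publish each raw cloud-asset record as its own message."""
    for obj in raw_objects:
        out_q.put(obj)
    out_q.put(END)


def topology_service(in_q, out_q):
    """Topology: consume raw records and publish graph nodes derived from them."""
    while (obj := in_q.get()) is not END:
        out_q.put({"ggid": f"{obj['vendor']}:{obj['id']}", "type": obj["type"]})
    out_q.put(END)


def drain(q):
    """Collect everything a downstream consumer would receive."""
    items = []
    while (item := q.get()) is not END:
        items.append(item)
    return items


collector_service([{"vendor": "aws", "id": "i-123", "type": "EC2Instance"}],
                  collector_to_topology)
topology_service(collector_to_topology, topology_to_detection)
nodes = drain(topology_to_detection)
```

Each service only knows its input and output queues, which is what keeps the microservices loosely coupled: the collector never needs to know which service consumes its output.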

The graph input flow relies first and foremost on the collectors microservice. The collectors are independent of the graph; their job is to collect data about the cloud assets in the investigated environment. They send a large number of requests to the cloud vendor's API servers, which respond with metadata about each object. For high performance, the graph must adapt and be capable of digesting incoming data in any order. Consider creating a relationship between a node already in the graph and a node that has not yet been digested: a placeholder node must be created with the minimal details sufficient to characterize it, such as its ID, entity type, and environment details.
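The placeholder idea can be sketched with a small in-memory graph. This is an illustration under stated assumptions, not the product's implementation: the `Graph` class, the `placeholder` flag, and the `CAN_ASSUME` edge type are hypothetical. An edge may reference a node that has not arrived yet; the node is created with minimal details and filled in when its full record is digested.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    ggid: str
    entity_type: str
    environment: str
    placeholder: bool = True  # stays True until the full object is digested
    properties: dict = field(default_factory=dict)


class Graph:
    def __init__(self):
        self.nodes = {}     # ggid -> Node
        self.edges = set()  # (src_ggid, edge_type, dst_ggid)

    def _ensure(self, ggid, entity_type, environment):
        """Return the node, creating a minimal placeholder if it is unknown."""
        if ggid not in self.nodes:
            self.nodes[ggid] = Node(ggid, entity_type, environment)
        return self.nodes[ggid]

    def add_edge(self, src, dst, edge_type):
        """src and dst are (ggid, entity_type, environment); either may be unseen."""
        self._ensure(*src)
        self._ensure(*dst)
        self.edges.add((src[0], edge_type, dst[0]))

    def digest_object(self, ggid, entity_type, environment, properties):
        """Full ingestion: fill in a placeholder, or create the node outright."""
        node = self._ensure(ggid, entity_type, environment)
        node.properties.update(properties)
        node.placeholder = False
        return node


g = Graph()
# The link arrives before the role it points to has been digested.
g.add_edge(("aws:user/alice", "IAMUser", "acct-1"),
           ("aws:role/admin", "IAMRole", "acct-1"), "CAN_ASSUME")
was_placeholder = g.nodes["aws:role/admin"].placeholder
g.digest_object("aws:role/admin", "IAMRole", "acct-1", {"RoleName": "admin"})
```

Because `_ensure` is idempotent, the same code path handles both arrival orders, which is what lets the graph digest collector output without sorting it first.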

The graph requires a strict data model to convert raw data into a live, linked topology. For each data object type received from the collectors, the graph data model defines whether it is relevant for mapping and, if so, which graph object represents it (a node or an edge). It also defines which key-value properties the graph object keeps, which links it can take part in (as either source or target), and more.
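A data model of this kind can be expressed as a lookup table from raw collector types to graph representations. The sketch below is hypothetical throughout: the raw type keys borrow the CloudFormation naming style for readability, and the labels, kept properties, and link names are illustrative choices.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelEntry:
    graph_object: str            # "node" or "edge"
    label: str                   # node label or edge type in the graph
    keep_properties: tuple = ()  # key-value properties copied from the raw object
    links_out: tuple = ()        # edge types this object may originate
    links_in: tuple = ()         # edge types this object may receive


# Hypothetical data model: raw collector object type -> graph representation.
DATA_MODEL = {
    "AWS::EC2::Instance": ModelEntry("node", "EC2Instance",
                                     ("InstanceId", "PublicIpAddress"),
                                     links_out=("HAS_ROLE",), links_in=("EXPOSES",)),
    "AWS::IAM::Role": ModelEntry("node", "IAMRole", ("RoleName", "Arn"),
                                 links_in=("HAS_ROLE",)),
}


def map_raw_object(raw_type, raw):
    """Return (label, kept properties) for relevant objects, or None if unmapped."""
    entry = DATA_MODEL.get(raw_type)
    if entry is None:
        return None  # not relevant for mapping
    props = {k: raw[k] for k in entry.keep_properties if k in raw}
    return entry.label, props


mapped = map_raw_object("AWS::EC2::Instance",
                        {"InstanceId": "i-0abc", "PublicIpAddress": "203.0.113.10",
                         "Tags": []})
ignored = map_raw_object("AWS::CloudWatch::Alarm", {"AlarmName": "cpu"})
```

Keeping the model as data rather than code means adding a new entity type is a table change, and anything not listed is dropped explicitly instead of leaking unstructured fields into the graph.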

Every object in the graph must follow the same uniform structure so it can be digested and queried easily and efficiently. For example, a node in the graph must hold its own Global Graph ID (GGID), its data collection source, the first and last time it was seen in the graph, the environment in which it lives, and any additional type-specific keys. Only with a data model in place can the graph be used for security research and for gaining insights; without one, there is not much that can be done with the graph.
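A uniform node envelope could look like the dataclass below. The field names mirror the attributes listed above (GGID, collection source, environment, first and last seen, type-specific keys), but the exact names, the `touch` helper, and the sample values are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class GraphNode:
    ggid: str           # Global Graph ID
    source: str         # data collection source (e.g. a collector name)
    environment: str    # account, subscription, or cluster the node lives in
    first_seen: datetime
    last_seen: datetime
    type_specific: dict = field(default_factory=dict)

    def touch(self, seen_at: datetime) -> None:
        """Record a new sighting while keeping the earliest first_seen."""
        self.first_seen = min(self.first_seen, seen_at)
        self.last_seen = max(self.last_seen, seen_at)


t1 = datetime(2024, 1, 1, tzinfo=timezone.utc)
t2 = datetime(2024, 1, 2, tzinfo=timezone.utc)
node = GraphNode("ggid-123", "aws-collector", "acct-1", t1, t1,
                 {"instance_type": "t3.micro"})
node.touch(t2)
```

Every node type shares the same common fields, while anything specific to an entity type lives in `type_specific`, so generic queries (for example, "nodes not seen in 30 days") work across the whole graph.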

The nodes defined in the data model can be categorized into different contexts, as discussed in previous blogs: Networking, Compute, Identity & Security, Database, Storage, Analytics, Management & Governance, Machine Learning, and more.

From a performance point of view, this phase is critical because it directly affects the performance of the entire graph microservice workflow. We want our topology service to digest large numbers of data objects from multiple sources and vendors and convert them to graph objects concurrently, efficiently, reliably, and consistently. For that purpose, we need a graph database that follows the ACID model. ACID stands for Atomicity, Consistency, Isolation, and Durability: each transaction is executed as a whole or not at all (no partial execution), every transaction leaves the database in a valid state, concurrent transactions do not interfere with each other, and committed transactions survive failures. Neo4j, today's leading graph database, is fortunately ACID-compliant. An efficient implementation of such microservices, with suitable server specs and database configuration, should reach tens of thousands of writes per second, which is usually enough for common workloads.
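The "all or nothing" property is what lets a topology writer retry a failed batch safely. The sketch below uses Python's built-in `sqlite3` purely as a stand-in ACID store (it is not a graph database, and the `nodes` table is hypothetical) to show a batch that fails halfway leaving no partial writes behind.

```python
import sqlite3


def write_batch(conn, rows):
    """Write (ggid, node_type) rows as one transaction: all of them or none."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.executemany(
                "INSERT INTO nodes (ggid, node_type) VALUES (?, ?)", rows)
        return True
    except sqlite3.IntegrityError:
        return False


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE nodes (ggid TEXT PRIMARY KEY, node_type TEXT NOT NULL)")

first_ok = write_batch(conn, [("ggid-1", "EC2Instance"), ("ggid-2", "IAMRole")])
# "ggid-1" already exists, so this whole batch is rolled back, including "ggid-3".
second_ok = write_batch(conn, [("ggid-3", "S3Bucket"), ("ggid-1", "EC2Instance")])
count = conn.execute("SELECT COUNT(*) FROM nodes").fetchone()[0]
```

Without atomicity, the second batch would have left `ggid-3` half-ingested with no record of why, which is exactly the kind of inconsistency a concurrently fed topology service cannot afford.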

Another point worth mentioning is that the cloud graph supports all major cloud vendors (AWS, Google Cloud, Microsoft Azure) and orchestration platforms (e.g., Kubernetes). That is, it not only builds mini-graphs and connects objects from the same collection session (the same account of the same vendor), but also connects objects across different accounts and even different cloud vendors. For example, a Kubernetes Service that is exposed by an AWS Load Balancer shows up in the graph as a direct link between these two independently digested objects. This is where the magic of the graph happens: cross-environment links yield insights that are very difficult to obtain any other way.
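One plausible way to derive the Kubernetes-to-AWS link is to join on the load balancer's DNS name. This sketch assumes the Service object carries the hostname under `status.loadBalancer.ingress[].hostname` (as Kubernetes reports for AWS-provisioned load balancers) and the AWS object carries its `DNSName`; the `ggid` keys and the `EXPOSED_BY` edge type are hypothetical additions.

```python
def link_k8s_services_to_elbs(k8s_services, aws_load_balancers):
    """Return (service_ggid, "EXPOSED_BY", elb_ggid) edges across vendors.

    Matching key (an assumption): the Service's load balancer ingress
    hostname equals the AWS load balancer's DNSName, compared case-insensitively.
    """
    by_dns = {lb["DNSName"].lower(): lb["ggid"] for lb in aws_load_balancers}
    edges = []
    for svc in k8s_services:
        if svc.get("spec", {}).get("type") != "LoadBalancer":
            continue  # only LoadBalancer Services are fronted by a cloud LB
        ingress_list = svc.get("status", {}).get("loadBalancer", {}).get("ingress", [])
        for ingress in ingress_list:
            hostname = ingress.get("hostname", "").lower()
            if hostname in by_dns:
                edges.append((svc["ggid"], "EXPOSED_BY", by_dns[hostname]))
    return edges


services = [
    {"ggid": "k8s:svc/web", "spec": {"type": "LoadBalancer"},
     "status": {"loadBalancer": {"ingress": [
         {"hostname": "my-elb-123.us-east-1.elb.amazonaws.com"}]}}},
    {"ggid": "k8s:svc/internal", "spec": {"type": "ClusterIP"}},
]
load_balancers = [{"ggid": "aws:elb/my-elb",
                   "DNSName": "my-elb-123.us-east-1.elb.amazonaws.com"}]
cross_links = link_k8s_services_to_elbs(services, load_balancers)
```

The two objects come from different collectors and may arrive in any order, so a join like this naturally belongs to a step that runs once both sides are present in the graph.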

Despite the advantages of the topology ingestion microservice and its highly dynamic and scalable design, it cannot build the complete structural picture of the cloud environment on its own. This is because not all the data received at this stage can be translated immediately into its topological implications (e.g., the creation of nodes and links).

For example, think of aggregative links: links that attach one node to many other nodes. Let’s say a specific permission has been given to all the users in the account (not a great practice security-wise, yet a very popular one). Since the order of incoming data is arbitrary, it is possible (and even likely) that information about this permission is digested before information about all the users in the account.

Since we wish to cut time dependencies and digest data “on the fly”, we basically have two options. The first is to create an “All Users” node and attach every created user to it. This is not optimal for two reasons: first, it pollutes the data model (“All Users” is not a true cloud entity but an aggregation of such entities); second, it is non-performant (the number of edges created is doubled). The better approach is a complementary post-formation microservice that, in a single write query, links this permission to all the user nodes digested in the previous phase.
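A post-formation pass of this kind can be sketched as follows: during ingestion, an aggregative link is only recorded as pending, and once formation is done, all pending links are expanded in one bulk write. The tuple layout, the `GRANTED_TO` edge type, and the in-memory `edges` set (standing in for a single database write query) are assumptions for illustration.

```python
def resolve_aggregative_links(pending, nodes_by_type, edges):
    """Expand deferred "applies to all <type>" links after formation completes.

    pending: (source_ggid, edge_type, target_type, environment) tuples recorded
    during ingestion, when the target nodes may not have existed yet.
    """
    new_edges = [
        (src, edge_type, node["ggid"])
        for src, edge_type, target_type, env in pending
        for node in nodes_by_type.get(target_type, [])
        if node["environment"] == env
    ]
    edges.update(new_edges)  # one bulk write instead of one write per user
    return len(new_edges)


pending = [("aws:policy/read-all", "GRANTED_TO", "IAMUser", "acct-1")]
nodes_by_type = {"IAMUser": [
    {"ggid": "aws:user/alice", "environment": "acct-1"},
    {"ggid": "aws:user/bob", "environment": "acct-1"},
    {"ggid": "aws:user/eve", "environment": "acct-2"},
]}
edges = set()
created = resolve_aggregative_links(pending, nodes_by_type, edges)
```

No synthetic "All Users" node is needed: the permission links directly to real user nodes, scoped to its own account.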

This microservice is responsible for the final touches of the cloud graph topology building process, and only upon its completion do we have the cloud environment represented in the most precise way possible. This effort will pay off in the later stages of the flow, as the attack path and visibility capabilities will rely on the exact topology built during this stage.

After ingesting the data and forming the topological structure of the environment, we now have a cloud graph in our hands. The next challenge is gleaning insights from it, using the resources we mapped into the graph together with the connections between them.

The insights we are looking to find are called Attack Paths.

In short, an attack path is the flow that takes place when an internal or external attacker exploits attack vectors, represented visually as a path in the same graph topology we built in the first phase. It is used to better understand attacks: where the attacker came from, which asset they can gain control of through this specific access (aka the main asset), and what privileges they can obtain by accessing that asset. The path usually concludes with the resources or actions the attacker gains by executing the attack.

We can divide the detection phase into two main steps: first, identifying potential main assets in the graph, and second, determining for every such asset (node) whether it is indeed the main asset of an attack path.

The first step can be done using two different methods. The first is a pattern-based mechanism, with each pattern specifying an attack scenario that revolves around a main asset. For example, an EC2 Instance exposed to the internet that leads to administrator access compromise. These sub-scenarios fork into a multi-branch path depicting several options for the attacker to pursue, some posing a more severe threat than others (more about branch and path prioritization in the next phase).
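A single pattern from the pattern-based method could be matched like this. It is a simplified sketch over a plain edge list, with hypothetical node types (`Internet`, `EC2Instance`, `IAMRole`), edge types (`EXPOSES`, `HAS_ROLE`), and an `admin` flag; a real system would typically express such a pattern as a graph database query.

```python
from collections import defaultdict


def find_exposed_ec2_to_admin(nodes, edges):
    """Hypothetical pattern: Internet -EXPOSES-> EC2Instance -HAS_ROLE-> admin IAMRole.

    nodes: {ggid: {"type": ..., ...}}; edges: iterable of (src, edge_type, dst).
    Returns {main_asset_ggid: [branch, ...]}; one main asset may fork into branches.
    """
    out = defaultdict(list)
    for src, edge_type, dst in edges:
        out[(src, edge_type)].append(dst)

    internet = [g for g, attrs in nodes.items() if attrs["type"] == "Internet"]
    paths = defaultdict(list)
    for ggid, attrs in nodes.items():
        if attrs["type"] != "EC2Instance":
            continue  # the main asset type this pattern revolves around
        for entry in internet:
            if ggid not in out[(entry, "EXPOSES")]:
                continue
            for role in out[(ggid, "HAS_ROLE")]:
                if nodes[role].get("admin"):
                    paths[ggid].append([entry, ggid, role])
    return dict(paths)


nodes = {
    "internet": {"type": "Internet"},
    "i-1": {"type": "EC2Instance"},
    "i-2": {"type": "EC2Instance"},
    "role-admin": {"type": "IAMRole", "admin": True},
    "role-ro": {"type": "IAMRole", "admin": False},
}
edges = [
    ("internet", "EXPOSES", "i-1"),
    ("i-1", "HAS_ROLE", "role-admin"),
    ("i-1", "HAS_ROLE", "role-ro"),
    ("i-2", "HAS_ROLE", "role-admin"),  # not exposed, so no attack path
]
detected = find_exposed_ec2_to_admin(nodes, edges)
```

The trade-off discussed below is visible here: the pattern is easy to read and audit, but every new main asset type needs another function like this one.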

The second, more sophisticated method is to use graph theory algorithms and machine learning models to identify main assets. Useful concepts in this context include graph neural networks (GNNs), graph algorithms such as shortest path, and node centrality metrics such as degree, closeness, betweenness, and eigenvector centrality. All of these can help determine a node's importance and its likelihood of being the main asset of an attack path (in other words, the center of an attack scenario).
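As a small taste of the centrality approach, the sketch below computes normalized degree centrality and BFS-based closeness centrality on an undirected toy graph and ranks candidate main assets. It covers only two of the metrics named above, and the graph and ranking rule are illustrative assumptions; a real system would combine many signals, possibly with a trained model.

```python
from collections import deque


def degree_centrality(adj):
    """Neighbour count normalised by n - 1, the maximum possible degree."""
    denom = max(len(adj) - 1, 1)
    return {v: len(nbrs) / denom for v, nbrs in adj.items()}


def closeness_centrality(adj):
    """(reachable nodes) / (sum of BFS hop distances); 0.0 for isolated nodes.

    Unweighted shortest paths; on disconnected graphs each node is scored only
    over the nodes it can reach.
    """
    scores = {}
    for source in adj:
        dist = {source: 0}
        queue = deque([source])
        while queue:
            v = queue.popleft()
            for w in adj[v]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)
        total = sum(dist.values())
        scores[source] = (len(dist) - 1) / total if total else 0.0
    return scores


# Undirected toy graph: one role shared by two instances and a Lambda function.
adj = {
    "role": {"i-1", "i-2", "lambda"},
    "i-1": {"role"},
    "i-2": {"role"},
    "lambda": {"role"},
}
degree = degree_centrality(adj)
closeness = closeness_centrality(adj)
ranking = sorted(adj, key=lambda v: (degree[v], closeness[v]), reverse=True)
```

The shared role scores highest on both metrics, matching the intuition that a node many workloads depend on is a strong main-asset candidate.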

These two methods have their trade-offs. The first is relatively easy to control and monitor because it is schematically defined, but time-consuming to maintain (a new main asset type requires a new graph pattern). The second method takes far more time to implement and train but is likely to expand the variety of scenarios detected and help reveal new insights.

When designed correctly, both methods can detect a variety of attack paths from almost every cloud environment graph.

After defining a data model, building the topology through the model, completing it from all angles, and detecting all existing attack paths in the account – the next challenge is to prioritize them by risk severity.

Hundreds of detected attack paths are meaningless unless they can be grouped, categorized, and prioritized. Only then can a cloud owner review these graph insights efficiently and resolve them, working down the severity chain.

Every attack path detected in the previous stage gets sent to the risk analysis microservice. This microservice oversees the enrichment of the path with extra details to help better understand the attack scenario, prioritize the attack path among others detected, and eventually remediate the risk it imposes on the cloud environment.

First, it asks every path it receives a series of questions (queries) called Risk Definitions. These questions are usually narrowly focused on specific areas of the attack path, which makes them lightweight and efficient (read-only, no writes). The risk definitions cover a wide range of topics: how easily an attacker can achieve network access, the sensitivity of the end target node, workload configuration issues, permission wildcards, cross-account risks, neglected assets in the path, exposed credentials, and much more. They help characterize the severity imposed by the different aspects of the path (network exposure, identity, workload hygiene, malware, CVEs, etc.). Each path aspect is called a Risk Star, and every answer to a risk definition mathematically affects the score of its star. After some number crunching across the different risk stars, the path receives a final numeric risk score. The risk definitions are also key to prioritization within the path itself: in a multi-branch path, they highlight the most severe sub-path so the focus stays on the main risk of the path.
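The exact scoring math is not described here, so the following is only a sketch of the structure: read-only predicates (risk definitions) feed per-aspect scores (risk stars), which are combined into one number. The definitions, weights, path attributes, the cap at 1.0, and the `1 - prod(1 - star)` combination rule are all hypothetical choices.

```python
import math
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RiskDefinition:
    name: str
    star: str                      # path aspect ("risk star") this question feeds
    weight: float                  # contribution to that star when the answer is yes
    check: Callable[[dict], bool]  # read-only question asked of the path


# Hypothetical definitions, weights, and path attributes.
RISK_DEFINITIONS = [
    RiskDefinition("publicly_reachable", "network", 0.6,
                   lambda p: p.get("internet_exposed", False)),
    RiskDefinition("admin_at_end", "identity", 0.8,
                   lambda p: p.get("target_is_admin", False)),
    RiskDefinition("wildcard_permissions", "identity", 0.3,
                   lambda p: "*" in p.get("actions", [])),
    RiskDefinition("known_cve", "vulnerability", 0.5,
                   lambda p: bool(p.get("cves"))),
]


def score_path(path):
    """Per-star scores capped at 1.0, combined as 1 - prod(1 - star), scaled to 0-10."""
    stars = {}
    for rd in RISK_DEFINITIONS:
        if rd.check(path):
            stars[rd.star] = min(1.0, stars.get(rd.star, 0.0) + rd.weight)
    combined = 1 - math.prod(1 - s for s in stars.values())
    return stars, round(10 * combined, 2)


stars, score = score_path({"internet_exposed": True, "target_is_admin": True,
                           "actions": ["s3:GetObject"], "cves": []})
```

With this combination rule, each additional risky aspect raises the score without letting any single star exceed its cap, and a path with no triggered definitions scores 0.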

Figure 2: An attack path showing an attacker achieving access to a Kubernetes replica set and using the risky cluster role attached to it to gain administrative privileges in the cluster

As part of the enrichment, three texts are generated for each attack path: the risk name (a concise statement of the path's implication, used as its title), the risk cause (the reason the path was detected), and the risk impact (the potential result of the risk). Text generation relies mostly on the risk definitions described earlier. These generated texts provide full contextual understanding of the presented attack scenario and its importance.
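Template-based generation driven by the triggered risk definitions is one simple way to produce these three texts. The templates, definition names, and wording below are hypothetical and serve only to show how definition answers can map onto the name, cause, and impact fields.

```python
# Hypothetical cause templates keyed by the risk definitions that fired on a path.
CAUSE_TEMPLATES = {
    "publicly_reachable": "{asset} is reachable from the internet",
    "admin_at_end": "{asset} can obtain administrator privileges",
    "wildcard_permissions": "{asset} is granted wildcard permissions",
}


def generate_texts(main_asset, asset_type, fired):
    """Return (risk name, risk cause, risk impact) from the fired risk definitions."""
    causes = [CAUSE_TEMPLATES[d].format(asset=main_asset)
              for d in fired if d in CAUSE_TEMPLATES]
    if "publicly_reachable" in fired and "admin_at_end" in fired:
        name = f"Publicly exposed {asset_type} leads to administrator access"
        impact = "An external attacker could take over the account"
    else:
        name = f"Risky {asset_type}"
        impact = "An attacker could abuse the permissions of this asset"
    return name, "; ".join(causes), impact


texts = generate_texts("i-0abc", "EC2 instance", ["publicly_reachable", "admin_at_end"])
```

Because the texts are derived from the same answers that drive scoring, the title and cause of a path always agree with why it was ranked where it is.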

After calculating the risk score and deriving the severity of the attack path, we need a more granular way of grouping and categorizing the data, since severity alone is insufficient. For example, think of a high-risk account with various detected attack paths, all in critical severity – without the right grouping, the cloud owner will suffer from alert fatigue, not knowing where to prioritize their efforts, and most likely won’t fix anything.

For that purpose, we need a grouping key that, on the one hand, groups the paths more granularly and, on the other, stays general enough that a group will contain more than one path in most cases. A good choice is typically to chain the path severity together with the main asset type and the risk name; these parameters are usually sufficient for distilling the true meaning of the attack path. That way, paths holding the same key are essentially the same (the only difference being that they concern a different main asset), so we would likely want to see them grouped to help us prioritize. Another useful property for dealing with a large number of detections is the attack path category, which gives a wider frame for filtering and prioritizing attack paths. Paths concerning the same general risk share the same category, and a single path can be labeled with multiple categories if it includes several causes of risk. Common categories include Administrator Access Compromise, Vulnerable Public Workload, Privilege Escalation, Neglected Resources, and many more.
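The grouping key and multi-label categories can be sketched in a few lines. The path records and their field names are hypothetical; the key itself follows the text (severity, main asset type, risk name), and the category names are taken from the list above.

```python
from collections import defaultdict


def group_paths(paths):
    """Group main assets by the (severity, main asset type, risk name) key."""
    groups = defaultdict(list)
    for p in paths:
        key = (p["severity"], p["main_asset_type"], p["risk_name"])
        groups[key].append(p["main_asset"])
    return dict(groups)


def filter_by_category(paths, category):
    """Paths can carry several categories, so one path may match several filters."""
    return [p["main_asset"] for p in paths if category in p["categories"]]


paths = [
    {"main_asset": "i-1", "main_asset_type": "EC2Instance", "severity": "Critical",
     "risk_name": "Public instance leads to admin access",
     "categories": {"Administrator Access Compromise", "Vulnerable Public Workload"}},
    {"main_asset": "i-2", "main_asset_type": "EC2Instance", "severity": "Critical",
     "risk_name": "Public instance leads to admin access",
     "categories": {"Administrator Access Compromise"}},
    {"main_asset": "role-x", "main_asset_type": "IAMRole", "severity": "High",
     "risk_name": "Role allows privilege escalation",
     "categories": {"Privilege Escalation"}},
]
groups = group_paths(paths)
public_workloads = filter_by_category(paths, "Vulnerable Public Workload")
```

Three detections collapse into two groups, and the two critical EC2 paths can be reviewed and fixed together since they differ only in the affected instance.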

After putting all the effort in formation, detection, and prioritization – our final goal in the risk analysis phase is to help the cloud owner mitigate the security risks detected in the attack paths.

There are two main types of attack path remediations. The simpler one is a verbal description of the problem and how to fix it – for example, by altering a specific configuration, limiting internet access to an asset, creating new security keys, and so on.

The more advanced type of remediation, also presented as a visual path, focuses on paths where identity issues cause the risk. This remediation is given by deny guardrails, which are dynamic policies generated by the Panoptica platform to reduce the discovered risks. The guardrails do not change existing policies; instead, they create a new policy that is attached side by side to the existing roles, users, or groups. The guardrails eliminate the attack vectors found, such as privilege escalation, by denying the risky actions only for the specific resources in the customer’s environment that an attacker could leverage (e.g., denying passing the admin role to new EC2 instances).
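For the example above, a deny guardrail could be an IAM policy document that denies `iam:PassRole` on the admin role only when the role is being passed to EC2, using the `iam:PassedToService` condition key. This is a hand-written sketch of what such a policy might contain, not the policy Panoptica generates; the function name, `Sid`, and role ARN are placeholders.

```python
import json


def deny_passrole_guardrail(role_arn, service="ec2.amazonaws.com"):
    """Deny passing one specific role to one AWS service; nothing else is restricted."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyPassAdminRoleToService",
            "Effect": "Deny",
            "Action": "iam:PassRole",
            "Resource": role_arn,
            "Condition": {"StringEquals": {"iam:PassedToService": service}},
        }],
    }


policy = deny_passrole_guardrail("arn:aws:iam::123456789012:role/Admin")
policy_json = json.dumps(policy, indent=2)
```

Because an explicit deny overrides any allow in IAM evaluation, attaching this new policy next to the existing ones closes the escalation route without rewriting the original permissions.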

The generated Terraform code containing the deny guardrail can be applied to eliminate the risk and resolve the attack path.
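Terraform also accepts JSON syntax (`*.tf.json` files), which makes it easy to emit from code. The sketch below wraps any IAM policy document in an `aws_iam_policy` resource and attaches it to a role with `aws_iam_role_policy_attachment`, leaving existing policies untouched. The resource names, role name, and sample document are hypothetical, and this is not the exact Terraform the platform produces.

```python
import json


def guardrail_to_terraform_json(name, policy_document, role_name):
    """Wrap an IAM policy document in Terraform JSON syntax (a *.tf.json file).

    Creates a new aws_iam_policy and attaches it beside the role's existing
    policies with aws_iam_role_policy_attachment.
    """
    return {
        "resource": {
            "aws_iam_policy": {
                name: {"name": name, "policy": json.dumps(policy_document)}
            },
            "aws_iam_role_policy_attachment": {
                f"{name}_attachment": {
                    "role": role_name,
                    "policy_arn": "${aws_iam_policy.%s.arn}" % name,
                }
            },
        }
    }


doc = {"Version": "2012-10-17",
       "Statement": [{"Effect": "Deny", "Action": "iam:PassRole",
                      "Resource": "arn:aws:iam::123456789012:role/Admin"}]}
tf = guardrail_to_terraform_json("deny_pass_admin_role", doc, "app-role")
tf_text = json.dumps(tf, indent=2)  # contents for a file such as guardrail.tf.json
```

The `${...}` string is Terraform interpolation, so the attachment refers to the ARN of the policy created in the same configuration rather than a hard-coded value.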

It is important to mention the close collaboration between the Graph Algorithm Team and the Cloud Security Research team, which enables the graph to provide its deep security value.

The researchers from the Cloud Security Research team are responsible for deep diving into and analyzing security vulnerabilities and weak spots in various cloud and Kubernetes services. The team’s research is spurred by interest in particular services or vulnerabilities, often prompted by issues seen frequently across customers’ environments, or prompted by market trends. They provide the graph team with all forms of enrichment to the graph topology – specific edges and nodes that cannot be derived from collectors, labels indicating severity and permissiveness of a specific role or roles, and additional properties on nodes or edges that give added value from a security perspective. The relationship between these two teams is symbiotic. The research team provides input to the graph for improved topology and detection, but also uses the graph for investigations and extended research endeavors.

To give a quick example, let’s look at an AWS access key that lies in cleartext as the value of an environment variable in a Lambda function. For background, an AWS access key ID is a 20-character string of uppercase letters and digits that starts with a fixed 4-letter prefix (AKIA for long-term user keys). It can therefore be identified with a simple regex pattern by an analyzer that scans the function code. An attacker holding both a user's access key and its secret can log in to AWS as that user and perform actions in the account, going as far as the user's permissions allow.
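The regex check described above fits in a few lines. This sketch scans a dictionary of environment variables (in practice these could come from the Lambda function's configuration) for strings of the form `AKIA` plus 16 uppercase letters or digits; the function name is hypothetical, and the sample key is AWS's documented example value, not a real credential.

```python
import re

# Long-term IAM user access key IDs: "AKIA" + 16 uppercase letters/digits (20 chars).
ACCESS_KEY_ID = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")


def find_access_keys(env_vars):
    """Return (variable_name, key_id) pairs for values containing an access key ID."""
    hits = []
    for name, value in env_vars.items():
        for match in ACCESS_KEY_ID.finditer(value):
            hits.append((name, match.group(1)))
    return hits


env = {"AWS_KEY": "AKIAIOSFODNN7EXAMPLE", "REGION": "us-east-1",
       "NOTES": "backup key=AKIAIOSFODNN7EXAMPLE"}
found = find_access_keys(env)
```

A match only shows that a key ID is exposed; linking it to the owning IAM user and that user's permissions is what turns the finding into an attack path.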

Figure 3: An attack path showing an attacker finding an AWS access key in a Lambda function and using it to log in as the key's matching user, gaining administrator privileges in the account

Now back to the graph: from the collector phase alone, the graph creates two disconnected nodes, one for the access key and one for the Lambda function, since the graph data model considers both to be graph entities. Only the secrets analyzer, defined by the research team, finds the access key exposed in cleartext inside the Lambda function code. The analyzer delivers this finding to the graph through a message broker, and the graph translates it into an edge from the access key to the Lambda function with the edge type “FOUND_IN” (namely, the access key is found in the Lambda function).
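On the graph side, consuming such findings might look like the sketch below, with a `queue.Queue` standing in for the message broker. The JSON message shape (`finding`, `secret_ggid`, `location_ggid`) and the GGID strings are hypothetical; the edge direction and the `FOUND_IN` type follow the text.

```python
import json
from queue import Queue


def apply_findings(broker: Queue, edges: set) -> None:
    """Translate analyzer findings from the broker into FOUND_IN edges.

    Hypothetical message: JSON with "finding", "secret_ggid", "location_ggid".
    """
    while not broker.empty():
        msg = json.loads(broker.get())
        if msg.get("finding") == "exposed_secret":
            # Edge direction follows the text: access key -> Lambda function.
            edges.add((msg["secret_ggid"], "FOUND_IN", msg["location_ggid"]))


broker = Queue()
broker.put(json.dumps({"finding": "exposed_secret",
                       "secret_ggid": "aws:accesskey/AKIAIOSFODNN7EXAMPLE",
                       "location_ggid": "aws:lambda/report-fn"}))
broker.put(json.dumps({"finding": "something_else"}))
edges = set()
apply_findings(broker, edges)
```

Before this step the two nodes are disconnected; the single new edge is what allows path detection to continue from the Lambda function to the key and on to its user.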

This kind of data inference happens constantly in the graph, translating the output of many research analyzers, patterns, and security findings into new graph topology. As a result, by the end of a scan the graph holds all the data it needs to detect a variety of attack paths and scenarios. Without the Cloud Security Research Team, the graph would mainly be a visibility tool displaying the topology of the cloud account without added security wisdom. Without the Graph Algorithm Team, the Cloud Security Research Team would have no way to translate its findings into a data engine that produces contextual value from them: detection of attack paths, prioritization, severity and risk scoring, and all the other advanced capabilities of the graph described in this blog post.

To conclude, we presented a cloud environment graph pipeline and broke it down into its microservices and flow. Many factors must be considered when dealing with graph-based architecture in the context of cloud security: designing the topology data model, choosing and configuring a graph database, detecting paths efficiently, and performing at high scale. By implementing and using such architectures, the graph's power can be fully utilized, a variety of underlying attack paths and security insights can be surfaced, Mean Time to Response (MTTR) can be improved, and more, all leading toward the goal of a safer, risk-free cloud environment. Schedule a demo today to see for yourself.