In our first two entries in this series, we explored installing Microsoft Defender for Endpoint (MDE) XDR agents on AWS EC2 instances, using manual scripts and AWS EC2 Image Builder, respectively. In this penultimate entry in the series, we will experiment with installing MDE onto Amazon Elastic Kubernetes Service (EKS) Nodes.

MDE on EKS is not very impressive on its own, as EKS Nodes are simply EC2 instances that are onboarded into your Cluster, so you could accomplish the same thing with User Data scripts or other means. In this post, the Panoptica Office of the CISO is happy to announce the release of an internal tool we use to harden, bootstrap, and manage our own EKS Clusters. We will use it throughout this post so you can easily follow along!

Before moving too far ahead, let us quickly level set on the wonderful world of containers, Kubernetes, and AWS’ managed version: EKS. This will not be a deep dive, nor will it do these subjects much justice; for more information, refer to Docker's material on containers and to the Kubernetes documentation written by the Kubernetes project. Feel free to skip this section if you only care about the new tool and the technical content of this post.

Containers are small units of software that ideally contain only the minimum dependencies necessary to run a specific application or service. In theory, when properly built with the true minimum of necessary components, containers have several advantages over running software on a traditional server with a full operating system.

At scale, however, running containers can become problematic due to requirements such as networking, logical isolation, and resource management, among other issues. To solve these problems, companies began creating management platforms to alleviate that operational burden. The added benefits of these platforms, such as Google’s Borg (a spiritual progenitor of Kubernetes), were increased reliability, easier adoption, and support for complex container-based deployments with a lower learning curve.

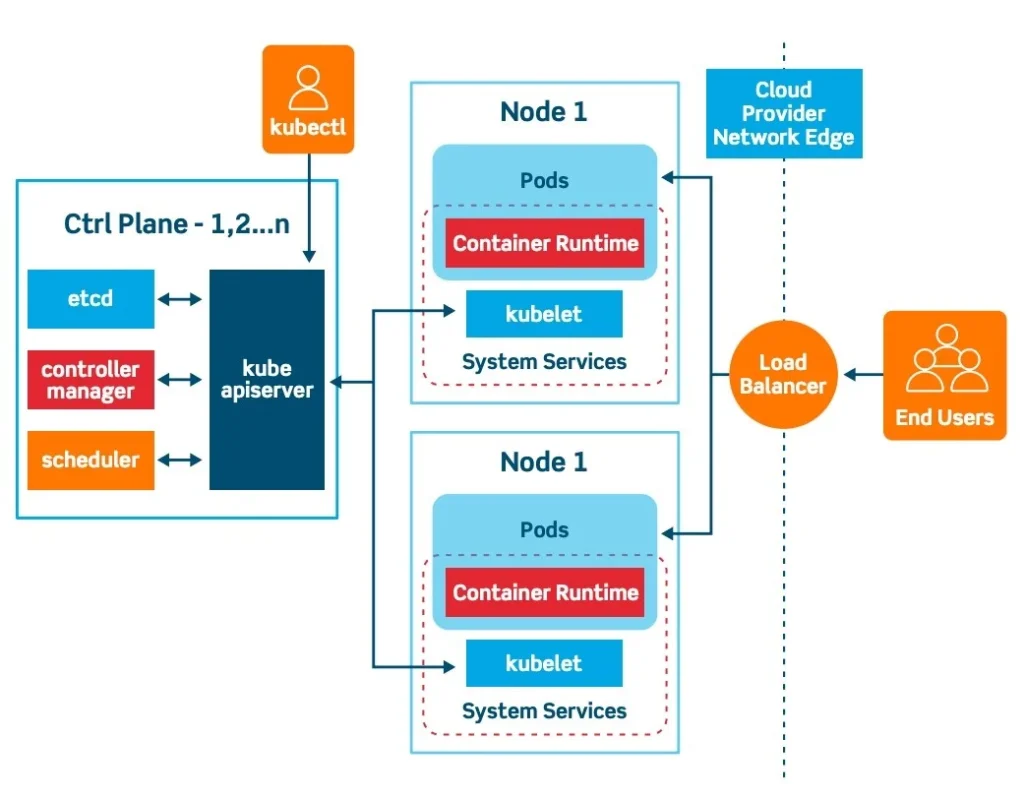

Like Borg, Kubernetes is a cluster management platform used to orchestrate containers. An individual deployment of Kubernetes is called a cluster, which runs on nodes: traditional servers, such as Amazon EC2 instances, that supply the resources the cluster consumes. Nodes fall into two classes. The first is the master node (also called the control plane), which contains the “brain” of Kubernetes for tasks such as resource management, API management, network management, and more.

These control plane components have specific names, such as kube-controller-manager, kube-scheduler, and kube-apiserver, and they communicate with the kubelets deployed on the second class of nodes: the worker nodes. Each worker node runs at least one pod, and each pod can run one or more containers. Pods consume resources from the worker nodes and provide them to containers based on their needs or specifications. The diagram from Platform9 (FIG. 1) helps capture many of these concepts.

Beyond orchestration and resource management, Kubernetes helps with other issues: it cleans up corrupt or otherwise “dead” containers, increases logical separation in multi-project and multi-team environments through namespaces, supports rolling updates and coordinated version upgrades (such as A/B testing or Blue/Green deployments), manages network access via ingress controllers, and more. The industry around Kubernetes quickly rose to the occasion with further network and security capabilities such as service meshes, mutual TLS, Policy-as-Code engines, runtime security protection, cluster vulnerability management, and even Static Application Security Testing (SAST) tools that run against Kubernetes configuration files.

Figure 1 - Kubernetes logical diagram, by Platform9

As with many management abstractions within information technology, there are always drawbacks and inherent risks, and Kubernetes is no different. Taking control of the Kubernetes control plane, for instance, would be catastrophic: adversaries could then control every other node in the cluster along with all of its pods and containers, whether to extract sensitive data or to use the infrastructure for malicious purposes such as cryptocurrency “mining” or as part of a botnet. In some cases, adversaries do not even need to take control of the master node; they can exploit various weaknesses and vulnerabilities to take control of individual containers, “escape” from them onto the host nodes, and conduct attacks from there. In extreme cases, adversaries can go as far as installing their own kubelet onto Nodes and absorbing them into their own Clusters, gaining direct control akin to taking over a master node.

The above is far from an exhaustive list of attacks against Kubernetes and its components, and none of it is surprising. Whenever companies adopt new technologies, whether VMware server virtualization hypervisors, AWS or GCP Infrastructure-as-a-Service servers, containers, or Kubernetes, adversaries at every level, from extremely lethal to opportunistic, will look for ways to exploit them. Just as companies’ margins may live and die by the competitive advantages afforded by digital transformation, adversarial groups and organized crime may live and die by how lethal they are against these new platforms, especially as cybercrime becomes increasingly corporatized and part of national security strategies.

This brings us to why cloud providers got into Kubernetes in the first place and how their managed offerings differ. As with anything on the public cloud, the benefits are pay-as-you-go Operational Expense (OpEx) models, lower costs of ownership due to cloud providers’ economies of scale, and the shifting of operational burdens under the Shared Responsibility Model. With managed Kubernetes offerings such as Amazon EKS, the cloud provider manages the master node (control plane) on your behalf. Cloud providers also install cloud-oriented components on the control plane to further protect access to the Kubernetes API server and (typically) move Authentication and Authorization to cloud-native identity stores such as AWS IAM.

Amazon EKS and its equivalents lower the burden of cluster upgrades and (mostly) seamlessly marry Kubernetes constructs with cloud-native infrastructure such as AWS VPCs, AWS IAM, EC2 Security Groups, AWS’ managed Docker repository Amazon Elastic Container Registry (ECR), EC2 for Nodes, and Amazon Route 53 for DNS; some even offer advanced security protection such as Amazon GuardDuty’s Kubernetes Protection and AWS App Mesh. If a company has already bought into a specific cloud provider, it makes a lot of sense to move towards that provider's managed offerings, especially for Kubernetes, as doing so further reduces the operational strain of self-managing clusters. The Panoptica Office of the CISO and platform teams run workloads on Amazon EKS, and the Panoptica Security Research team also conducts considerable research and vulnerability disclosures, as it is in our own interest to ensure that the platform is as secure-by-default as possible.

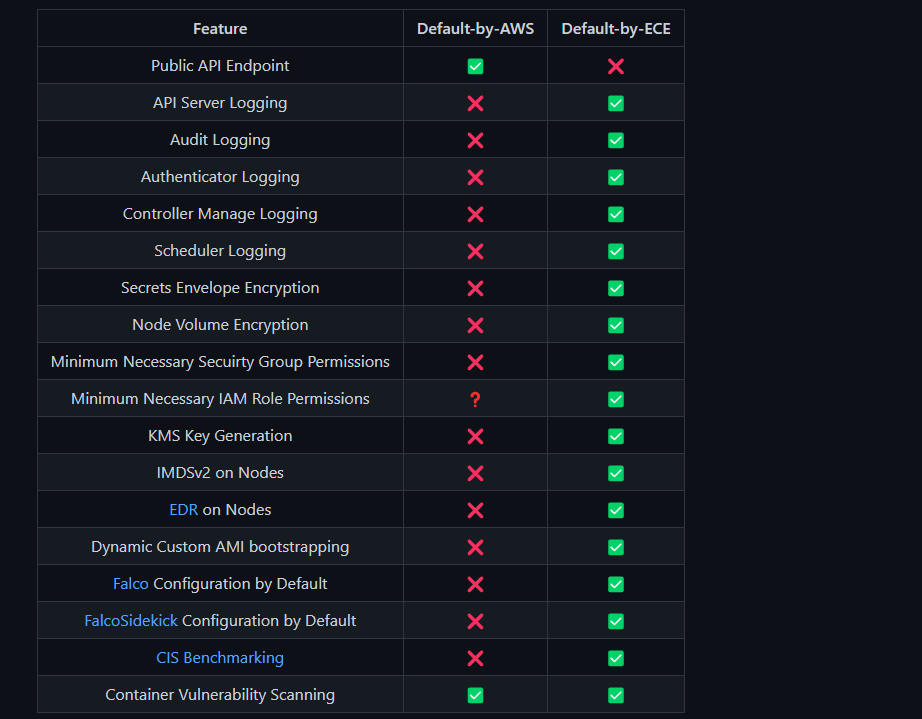

As mentioned in the introduction, the Panoptica Office of the CISO has open-sourced a tool previously used internally to deploy and securely configure EKS Clusters. The EKS Creation Engine, or ECE for short, is a simple Python CLI program that automates the creation of a cluster and manages it throughout its lifecycle; every AWS service required to run containers on EKS is built with security and hardening in mind. ECE builds a far more secure cluster than AWS does by default, as shown in the (cheeky) chart in our GitHub repo (FIG. 2).

Figure 2 - ECE vs AWS Secure Defaults

ECE frees DevOps and other teams from maintaining multiple Infrastructure as Code (IaC) templates and worrying about how to create a secure baseline to build from. ECE's plugin modularity also goes beyond what IaC tools can easily achieve by providing dynamic and conditional bootstrapping of Clusters and Nodes, such as installing Falco and FalcoSidekick for runtime protection and alerting, or installing MDE on Nodes across different OS flavors and architectures. We will be doing a separate post on ECE and will continue to expand and maintain it; we hope to see some of you opening Issues and Pull Requests as time goes on!

Important Note! Before moving forward, ensure that you have the AWS CLI, kubectl, and Helm installed and configured on your endpoint. For the best experience, consider using an AWS Cloud9 IDE with an IAM Instance Profile attached. The GitHub documentation provides steps to install kubectl and Helm if you need assistance.
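If you would like to confirm these prerequisites from a terminal before going further, a small check like the one below can help. It is our own minimal sketch rather than part of ECE, and it assumes the tools are on your PATH under their usual command names (`aws`, `kubectl`, `helm`, `git`, `python3`, `pip3`); the Python 3.6 floor comes from the repository instructions that follow.

```shell
#!/usr/bin/env bash
# Minimal prerequisite check (a sketch, not shipped with ECE).
# Assumes each tool is on PATH under its usual command name.
set -uo pipefail

# Print every missing command to stderr; return non-zero if any are missing.
check_prereqs() {
  local tool missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# ECE is a Python 3 program; this post asks for Python 3.6 or newer.
check_python_version() {
  python3 -c 'import sys; sys.exit(0 if sys.version_info >= (3, 6) else 1)'
}

if check_prereqs aws kubectl helm git python3 pip3 && check_python_version; then
  echo "All prerequisites found"
else
  echo "Install or upgrade the missing tools before continuing" >&2
fi
```

Installing the AWS CLI is only half of "configured"; running `aws sts get-caller-identity` is a quick way to confirm it can actually authenticate as the IAM principal you expect.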

Using ECE is incredibly easy, and we will go through it right now. First, clone the repository and install the required dependencies. You should have at least Python 3.6 and Git installed on your host system.

git clone https://github.com/lightspin-tech/eks-creation-engine

cd eks-creation-engine

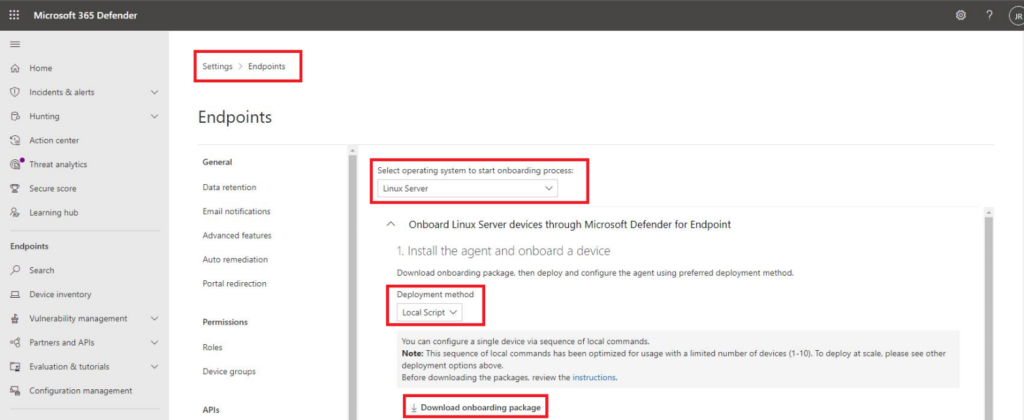

pip3 install -r requirements.txtBefore kicking off ECE, we need to ensure that our MDE configuration script is uploaded to S3 with a specific prefix. First, navigate to Endpoints Onboarding in the MDE Console and download a Linux onboarding script as shown below (FIG. 3). Ensure you do not unzip the file as the script generator within ECE will do that on your Nodes.

From the directory you downloaded your onboarding script to, use the AWS CLI to issue the following commands.

```shell
# Ensure you change the value of BUCKET_NAME to your actual bucket name
S3_BUCKET='BUCKET_NAME'

aws s3 cp ./WindowsDefenderATPOnboardingPackage.zip "s3://${S3_BUCKET}/mdatp/WindowsDefenderATPOnboardingPackage.zip"
```

Now we are ready to use ECE. Change back to the eks-creation-engine directory if you have left it. The following command is the absolute bare minimum needed to create a cluster with ECE, because arguments such as your Cluster and EKS Nodegroup names have preconfigured defaults; a second example follows if you want to change these values.

```shell
# Replace the placeholder values below (S3_BUCKET is set in the previous step)
PRIVATE_SUBNET_ONE='fill_me_in'
PRIVATE_SUBNET_TWO='fill_me_in'
VPC_ID='fill_me_in'

python3 main.py \
  --s3_bucket_name "$S3_BUCKET" \
  --mde_on_nodes True \
  --subnets "$PRIVATE_SUBNET_ONE" "$PRIVATE_SUBNET_TWO" \
  --vpcid "$VPC_ID"
```

The following command repeats the previous example with extra optional arguments supplied. Many more arguments are available; they are covered in the documentation and will be the subject of another blog post.

```shell
# Replace the placeholder values below (S3_BUCKET is set in the previous step)
PRIVATE_SUBNET_ONE='fill_me_in'
PRIVATE_SUBNET_TWO='fill_me_in'
VPC_ID='fill_me_in'
CLUSTER_NAME='fill_me_in'
NODEGROUP_NAME='fill_me_in'

python3 main.py \
  --s3_bucket_name "$S3_BUCKET" \
  --mde_on_nodes True \
  --subnets "$PRIVATE_SUBNET_ONE" "$PRIVATE_SUBNET_TWO" \
  --vpcid "$VPC_ID" \
  --cluster_name "$CLUSTER_NAME" \
  --nodegroup_name "$NODEGROUP_NAME" \
  --ebs_volume_size 28 \
  --node_count 5
```

Once the command is issued, it can take up to 25 minutes for your Cluster and Nodes to come online, depending on the optional configurations you enable and how many Nodes you requested for your Nodegroup. By default, ECE uses the latest EKS-optimized AMD64 AMI for Ubuntu 20.04 LTS that matches your specified Kubernetes version (1.21 is the default as of the time of this post). Amazon Linux 2 is also supported on both AMD64 and ARM64.
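While the build runs, you can have the AWS CLI block until the Cluster and Nodegroup report ACTIVE and then point kubectl at the new cluster. The sketch below is not part of ECE; `CLUSTER_NAME` and `NODEGROUP_NAME` are placeholders for whatever names you passed to ECE (or its defaults), and it only prints the commands unless you set `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
# Sketch: wait for an ECE-built Cluster and Nodegroup, then configure kubectl.
# CLUSTER_NAME and NODEGROUP_NAME are placeholders; DRY_RUN=1 (default) only prints.
set -euo pipefail

CLUSTER_NAME="${CLUSTER_NAME:-my-cluster}"
NODEGROUP_NAME="${NODEGROUP_NAME:-my-nodegroup}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [[ "$DRY_RUN" == "1" ]]; then
    echo "$*"
  else
    "$@"
  fi
}

wait_for_cluster() {
  # Poll the EKS API until the Cluster, then the Nodegroup, reports ACTIVE.
  run aws eks wait cluster-active --name "$CLUSTER_NAME"
  run aws eks wait nodegroup-active --cluster-name "$CLUSTER_NAME" \
    --nodegroup-name "$NODEGROUP_NAME"
  # Add a kubeconfig context that authenticates with your current IAM principal.
  run aws eks update-kubeconfig --name "$CLUSTER_NAME"
  # List the Nodes; they should eventually report Ready.
  run kubectl get nodes -o wide
}

wait_for_cluster
```

Each CLI waiter gives up after a fixed number of polling attempts, so on a slow build you may simply need to run it again.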

While you wait for your new, hardened cluster to come online, let us review what is happening under the covers. First, every required component is created from scratch and tagged with the creation date and the ARN of your IAM Principal (session, Role, User, etc.) so you can track ownership. ECE attaches only the minimum required AWS-managed IAM Policies to your IAM Roles and opens only the minimum necessary ports on the Security Group: DNS, HTTPS, and the Kubelet port (TCP 10250) for communication with kubectl. ECE also adds ports 8765 and 2801 for Falco and FalcoSidekick, respectively. By default, AWS will accept whatever Security Groups you provide and will also create a security group that allows all ingress from itself, so anything attached to that Security Group has unfettered network communication with everything else attached to it.
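To make the least-privilege Security Group idea concrete, here is a rough sketch of opening only the ports named above with the AWS CLI. It is not ECE's code: `SG_ID` and `SOURCE_CIDR` are hypothetical placeholders, and restricting sources to a single CIDR (for example your VPC range) and covering DNS on both TCP and UDP 53 are our assumptions. By default it only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: allow ingress only on the ports named in this post.
# SG_ID and SOURCE_CIDR are hypothetical placeholders, not values ECE uses.
# DRY_RUN=1 (the default here) prints the AWS CLI calls instead of running them.
set -euo pipefail

SG_ID="${SG_ID:-sg-0123456789abcdef0}"
SOURCE_CIDR="${SOURCE_CIDR:-10.0.0.0/16}"   # e.g. your VPC CIDR (assumption)
DRY_RUN="${DRY_RUN:-1}"

# port/protocol pairs: DNS (TCP and UDP assumed), HTTPS, Kubelet, Falco, FalcoSidekick
INGRESS_RULES=("53/tcp" "53/udp" "443/tcp" "10250/tcp" "8765/tcp" "2801/tcp")

open_ingress_rules() {
  local rule port proto
  local -a cmd
  for rule in "${INGRESS_RULES[@]}"; do
    port="${rule%/*}"    # text before the slash
    proto="${rule#*/}"   # text after the slash
    cmd=(aws ec2 authorize-security-group-ingress --group-id "$SG_ID"
      --protocol "$proto" --port "$port" --cidr "$SOURCE_CIDR")
    if [[ "$DRY_RUN" == "1" ]]; then
      echo "${cmd[*]}"
    else
      "${cmd[@]}"
    fi
  done
}

open_ingress_rules
```

Adding rules one at a time to a Security Group that starts with no ingress keeps every open port explicit, which is the opposite of the allow-all self-referencing rule described above.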

When you provide an OS version and architecture, ECE uses them to look up, in the AWS Systems Manager Parameter Store Public Parameters, the latest AMI ID of the matching EKS-optimized image for your Kubernetes version. By default, AWS EKS gives you the option to use Ubuntu or Amazon Linux, and any customization requires you to build your own Amazon EC2 Launch Templates and set up your own EKS bootstrapping scripts and Base64-encoded User Data. ECE automates the entire process and tailors User Data creation to each operating system and to whether you want MDE installed. ECE also ensures that the OS on your Nodes is fully updated before launching, to lower your initial “vulnerability debt.” All EC2 instances are also configured to use Instance Metadata Service Version 2 (IMDSv2), which limits Server-Side Request Forgery (SSRF) attacks and conforms to AWS best practices; AWS does not set this by default either.
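The lookup and Launch Template steps can be approximated with the AWS CLI as below. This is a hedged sketch, not ECE's implementation: the SSM parameter paths reflect the naming AWS and Canonical publish as we understand it (verify them for your Region and Kubernetes version), the User Data file is hypothetical, and `launch_template_data` is an illustrative helper that Base64-encodes the User Data and sets `HttpTokens` to `required`, which is what enforces IMDSv2.

```shell
#!/usr/bin/env bash
# Sketch: resolve an EKS-optimized AMI via SSM Public Parameters and build
# EC2 Launch Template data with Base64 User Data and IMDSv2 enforced.
# Parameter paths are our understanding of AWS/Canonical naming; verify them.
set -euo pipefail

# Map OS / architecture / Kubernetes version to a public SSM parameter name.
eks_ami_param() {
  local os="$1" arch="$2" k8s="$3"
  case "$os/$arch" in
    amazon-linux-2/amd64)
      echo "/aws/service/eks/optimized-ami/$k8s/amazon-linux-2/recommended/image_id" ;;
    amazon-linux-2/arm64)
      echo "/aws/service/eks/optimized-ami/$k8s/amazon-linux-2-arm64/recommended/image_id" ;;
    ubuntu/amd64)
      echo "/aws/service/canonical/ubuntu/eks/20.04/$k8s/stable/current/amd64/hvm/ebs-gp2/ami-id" ;;
    *)
      echo "unsupported OS/architecture: $os/$arch" >&2
      return 1 ;;
  esac
}

# Resolve the AMI ID (needs AWS credentials; not called in this sketch).
latest_ami_id() {
  local param
  param="$(eks_ami_param "$@")"
  aws ssm get-parameter --name "$param" --query 'Parameter.Value' --output text
}

# Emit Launch Template data: Base64 User Data plus IMDSv2 (HttpTokens=required).
launch_template_data() {
  local ami_id="$1" user_data_file="$2" encoded
  encoded="$(base64 < "$user_data_file" | tr -d '\n')"
  printf '{"ImageId":"%s","UserData":"%s","MetadataOptions":{"HttpTokens":"required","HttpEndpoint":"enabled"}}\n' \
    "$ami_id" "$encoded"
}

# Example (hypothetical names; user-data.sh would hold your bootstrap commands):
#   aws ec2 create-launch-template --launch-template-name my-eks-nodes \
#     --launch-template-data "$(launch_template_data "$(latest_ami_id ubuntu amd64 1.21)" user-data.sh)"
```

Keeping the OS/architecture mapping in one function makes it easy to see why per-OS User Data is needed: each branch resolves to a different image family, and the bootstrapping that follows has to match it.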

Lastly (for this example), ECE also creates an AWS Key Management Service (KMS) Customer Managed Key, which is used for EKS Secrets envelope encryption as well as full disk encryption of the EBS Volumes underlying your Nodes’ EC2 Instances. AWS does not do this by default, leaving the burden of creating the KMS keys and least-privilege policies on you. When Kubernetes stores Secrets, they are only Base64-encoded, which is trivial to reverse. Using KMS with a Key Policy that trusts only the Autoscaling Role, your IAM principal, and the Cluster and Nodegroup Roles drastically reduces the blast radius of credential exfiltration attacks. Additionally, encrypting your EBS Volumes can help you meet regulatory and compliance requirements, and it limits attackers’ ability to snapshot and share your EBS Volumes.
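A quick way to see why envelope encryption matters is to reproduce what Base64 "protection" amounts to. The sketch below round-trips a made-up secret value locally (it assumes GNU coreutils `base64`) and builds the `--encryption-config` JSON that `aws eks create-cluster` accepts for Secrets encryption; the key ARN and secret name are placeholders, and this is not how ECE itself wires up KMS.

```shell
#!/usr/bin/env bash
# Sketch: Base64 is an encoding, not encryption. Values below are made up.
set -euo pipefail

encode_secret() { printf '%s' "$1" | base64; }
decode_secret() { printf '%s' "$1" | base64 -d; }

stored="$(encode_secret 'hunter2')"
echo "stored value: $stored"                  # stored value: aHVudGVyMg==
echo "recovered: $(decode_secret "$stored")"  # recovered: hunter2

# On a live cluster the reversal is a single line (SECRET_NAME and the
# 'password' key are hypothetical):
#   kubectl get secret SECRET_NAME -o jsonpath='{.data.password}' | base64 -d

# Envelope encryption for Secrets, in the shape accepted by
# `aws eks create-cluster --encryption-config`; the key ARN is a placeholder.
secrets_encryption_config() {
  printf '[{"resources":["secrets"],"provider":{"keyArn":"%s"}}]\n' "$1"
}
```

Anyone who can read the stored object can run the decode step, which is why the Key Policy, rather than the encoding, is what actually narrows who can recover a Secret.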

After completion, navigate to the Endpoints panel within Microsoft 365 Defender and search by Group Tags using the Instance IDs of the Nodegroup EC2 instances. To MDE, these are just regular instances, no different in any meaningful way from any other AWS Linux EC2 Instance in your estate protected by MDE. You can use other automation, such as checking which EC2 instances belong to an EKS Nodegroup and tagging the corresponding Devices in MDE to denote that they are EKS-related, for additional reporting enrichment.
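As a starting point for that kind of automation, the sketch below lists the running EC2 Instance IDs behind a managed Nodegroup, which you can then search for in the MDE Endpoints panel. It relies on the `eks:nodegroup-name` tag that, as we understand it, EKS applies to managed Nodegroup instances; `NODEGROUP_NAME` is a placeholder, and tagging the matching Devices in MDE is left out because it depends on your MDE API setup.

```shell
#!/usr/bin/env bash
# Sketch: list running EC2 Instance IDs in an EKS managed Nodegroup so they can
# be searched for in the MDE Endpoints panel. Assumes EKS's eks:nodegroup-name
# instance tag; NODEGROUP_NAME is a placeholder. DRY_RUN=1 (default) only prints.
set -euo pipefail

NODEGROUP_NAME="${NODEGROUP_NAME:-my-nodegroup}"
DRY_RUN="${DRY_RUN:-1}"

nodegroup_instance_ids() {
  local -a cmd=(aws ec2 describe-instances
    --filters "Name=tag:eks:nodegroup-name,Values=$1" "Name=instance-state-name,Values=running"
    --query 'Reservations[].Instances[].InstanceId' --output text)
  if [[ "$DRY_RUN" == "1" ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

nodegroup_instance_ids "$NODEGROUP_NAME"

# On a Node itself, the MDE Linux client can report agent health:
#   mdatp health --field healthy
```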

The point of running an XDR agent like MDE on your EKS Nodes is to further entrench a defense-in-depth strategy. A large motivation for adversaries is to “break out” of Containers and Pods onto the individual Nodes to launch further attacks. An XDR agent can help detect and prevent TTPs such as lateral movement, downloading malicious packages, calling back to command-and-control infrastructure, and other OS-level attacks. ECE can also install Datadog and Falco on your clusters for further enriched monitoring and threat detection.

Furthermore, the Panoptica Platform can integrate with your EKS clusters using Helm Charts as well as ingest the events from Falco directly to correlate findings with the rest of your environment. Using the Panoptica Platform with ECE can help speed up the development and protection of your EKS clusters.

The Panoptica Office of the CISO looks forward to expanding ECE and to reader contributions in the future. We will wrap up this MDE on AWS series with reporting automation and business intelligence reporting very soon, and kick off several other projects we are excited about. Stay tuned; we’ll see you soon.

Stay Dangerous.