In the first entry in this series, we explored what Endpoint Detection and Response (EDR) is, and why the Panoptica Office of the CISO uses it to secure our Amazon EC2 server estate. We previously provided scripts and a basic walkthrough of the installation and configuration of the Microsoft Defender for Endpoint (MDE) Linux sensors. In this entry, we will build on that by exploring Amazon EC2 Image Builder and teaching you how to automate the creation of images with MDE pre-installed on them.

Amazon EC2 Image Builder (EC2IB) is a managed service that automates the creation of Amazon Machine Images (AMIs) for Amazon EC2 instances, as well as Docker container images. At Panoptica, we use EC2IB to create (or "bake") "golden" Images. A golden AMI can be thought of as a secure and properly configured baseline that you either use right away or use to create other AMIs. As mentioned in the previous entry, we use EC2 solely as a host for Kubernetes and container orchestration, so we can use our golden AMIs as-is. What constitutes a "golden" AMI depends on your business and technical requirements, but it generally includes the latest version of the Operating System (OS) that you use, complete with all the latest (at build time) updates, patches, and upgrades. It typically also includes your absolute baseline requirements: software dependencies, middleware, required Agents, and security configuration settings such as STIG or CIS hardening. Because we use these hosts for Kubernetes and containers, our applications are bootstrapped after we provision the hosts. Other teams may work similarly, installing middleware and other components after they launch the Instance.

The reason to use EC2IB over more "traditional" approaches, such as HashiCorp's Packer or your own AMI baking pipelines built from other AWS services (EC2 APIs for snapshotting and creating or sharing AMIs, AWS Lambda, AWS Systems Manager Automation, and others), is to limit the number of moving parts and the maintenance they require. However simple they are, all scripts require some form of human capital for management, upgrades, and bug fixes. EC2IB eliminates our need to maintain these "homebrewed" options, removes the reliance on third-party software (Packer), and adopts a more cloud-native and secure approach that abstracts all the pieces involved in baking and sharing AMIs on AWS.

For this blog entry, we won't be providing the AWS CloudFormation templates we use to build the required EC2 Image Builder dependencies such as Recipes and Components. Instead, we will use the CLI. Before getting too far ahead of ourselves, there are a few EC2 Image Builder concepts you need to understand to follow the flow. A Pipeline is an end-to-end automation that creates a new AMI from a specific Recipe, where that Recipe is a collection of Components. Components are essentially mini-scripts, either provided by AWS or authored by you, which provide various functionality and customization, from applying CIS hardening to installing specific software packages. A Recipe needs at least one Component. These are bundled with an Infrastructure Configuration, which defines the underlying infrastructure that the Recipe will be executed on, and finally Distribution Settings, which instruct your Pipeline to place the final product in specified Regions and Accounts.
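As a rough orientation, the sketch below maps each of these concepts to the AWS CLI call that lists it. The helper names `run_or_print` and `list_ib_resources` are hypothetical, and the `DRY_RUN` switch is an assumption added so the commands can be reviewed without touching an account; the `aws imagebuilder list-*` subcommands themselves are standard AWS CLI.

```shell
#!/usr/bin/env bash
# Sketch: map each EC2 Image Builder concept to the AWS CLI call that lists it.
# With DRY_RUN=1 (the default here) commands are printed rather than executed.
# run_or_print and list_ib_resources are hypothetical helper names.
run_or_print() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

list_ib_resources() {
  run_or_print aws imagebuilder list-components --owner Self       # Components you authored
  run_or_print aws imagebuilder list-image-recipes --owner Self    # Recipes
  run_or_print aws imagebuilder list-infrastructure-configurations # Infrastructure Configurations
  run_or_print aws imagebuilder list-distribution-configurations   # Distribution Settings
  run_or_print aws imagebuilder list-image-pipelines               # Pipelines
}
```

Running `list_ib_resources` prints the five commands; running `DRY_RUN=0 list_ib_resources` with valid credentials executes them.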

Distribution Settings do leave a lot to be desired. They're excellent for specifying the Regions you want the Image present in, such as any active Regions for your Product as well as any "disaster recovery" Regions. However, Distribution Settings become incredibly verbose when defined in the CLI or in Infrastructure-as-Code (IaC) templates such as AWS CloudFormation. While sharing with specific AWS Accounts is also possible, it does not scale well to many Accounts as it does not yet (at the time of this writing) support AWS Organizations IDs. We will instead use downstream calls to other AWS APIs to share the Image Organization-wide.
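To give a sense of how the verbosity grows with each Region, here is a minimal sketch of a shell helper that emits one `distributions` entry per Region. The function name `make_distributions` and the name prefix are hypothetical; the field names mirror the Distribution Configuration JSON used later in this entry, and `{{ imagebuilder:buildDate }}` is Image Builder's build-date variable.

```shell
#!/usr/bin/env bash
# Sketch: print a JSON "distributions" array with one entry per Region.
# make_distributions is an illustrative helper, not part of the AWS CLI.
make_distributions() {
  local prefix="$1"; shift
  local sep="" region
  printf '[\n'
  for region in "$@"; do
    # One line per Region; the AMI name embeds the Region and the build date.
    printf '%s  {"region": "%s", "amiDistributionConfiguration": {"name": "%s-%s-{{ imagebuilder:buildDate }}"}}' \
      "$sep" "$region" "$prefix" "$region"
    sep=$',\n'
  done
  printf '\n]\n'
}
```

For example, `make_distributions Ubuntu2110MDE us-east-1 us-west-2` prints a two-entry array that could be pasted into the `distributions` key of a Distribution Configuration document.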

We must first create a simple component that will perform basic upgrades and install the dependencies needed for MDE. In a production setting we'd have several Components which would perform CIS Hardening, install additional Agents (such as Application Performance Monitoring), install specific binaries, and so on - we will skip these steps and instead concentrate on MDE as well as the AWS Systems Manager (SSM) Agent, which is required for secure access via Session Manager and other operational automation (patching, configuration, compliance). Feel free to inject your own Components throughout the process.

Before creating the Components, we need to create a YAML document and upload it to an S3 Bucket we have access to. We will reference the URI of this S3 Object (the Component YAML) when passing the CLI command. Create a YAML file (such as mde_comp.yaml) and upload it to an S3 Bucket with the following command.

Note: Ensure you replace $MY_BUCKET with a name of an S3 Bucket to which you have access

cat <<EOF >mde_comp.yaml
name: InstallMDELinux
description: Installs MDE and Dependencies on Ubuntu
schemaVersion: 1.0
phases:
  - name: build
    steps:
      - name: InstallDependencies
        action: ExecuteBash
        inputs:
          commands:
            - apt update
            - apt upgrade -y
            - apt install -y curl python3-pip libplist-utils gpg apt-transport-https zip unzip
            - pip3 install --upgrade awscli
            - pip3 install --upgrade boto3
            - pip3 install --upgrade pip
            - curl -o microsoft.list https://packages.microsoft.com/config/ubuntu/20.04/prod.list
            - mv ./microsoft.list /etc/apt/sources.list.d/microsoft-prod.list
            - curl https://packages.microsoft.com/keys/microsoft.asc | apt-key add -
      - name: InstallSSM
        action: ExecuteBash
        inputs:
          commands:
            - snap install amazon-ssm-agent --classic
            - systemctl start snap.amazon-ssm-agent.amazon-ssm-agent.service
            - systemctl stop snap.amazon-ssm-agent.amazon-ssm-agent.service
            - systemctl status snap.amazon-ssm-agent.amazon-ssm-agent.service
            - systemctl start snap.amazon-ssm-agent.amazon-ssm-agent.service
            - snap list amazon-ssm-agent
      - name: InstallMDE
        action: ExecuteBash
        inputs:
          commands:
            - apt update
            - apt install -y mdatp
EOF

aws s3 cp ./mde_comp.yaml s3://$MY_BUCKET/mdatp/mde_comp.yaml

Once uploaded, use the following commands to create an EC2IB Component with your CLI.

Note: Ensure you replace $MY_BUCKET with a name of an S3 Bucket to which you have access.

aws imagebuilder create-component \
  --name InstallMDEUbuntu \
  --semantic-version 1.0.0 \
  --description 'Install Microsoft Defender for Endpoint without configuration' \
  --change-description 'Install Microsoft Defender for Endpoint without configuration - First Version' \
  --platform Linux \
  --supported-os-versions Ubuntu 20 \
  --uri s3://$MY_BUCKET/mdatp/mde_comp.yaml

After your Component has been created, copy down the ARN attributed to it.

When creating your EC2IB Recipe you must choose a base. Just as a food recipe starts with something to build flavors on (such as sauteed onions, garlic, ginger, and tomato paste for a Chicken Tikka Masala), an EC2IB Recipe starts with a parent Image. AWS provides automated population options within the Console to pick AWS-managed Images, but you can also use the ARN of another EC2IB Image you have created or the ID of any other AMI to which you have access. There are some other important considerations to keep in mind when specifying a parent image.

For our purposes, we will use Ubuntu 21.10, which is an interim release ahead of Ubuntu 22.04 LTS in 2022. This ensures that OS dependencies such as Bash, Python, Apt, and other components can be upgraded to their latest versions, which reduces the attack surface of your EC2 Image by reducing the number of vulnerabilities and weaknesses it carries. In our experience using MDE's vulnerability assessment (as well as Wazuh and Qualys) with the Ubuntu 18.04 LTS and 20.04 LTS images supplied by AWS, several hundred vulnerabilities are present, a fair number of them exploitable. Using 21.10 will eliminate many of these while (hopefully) maintaining compatibility with applications you built on top of those versions.

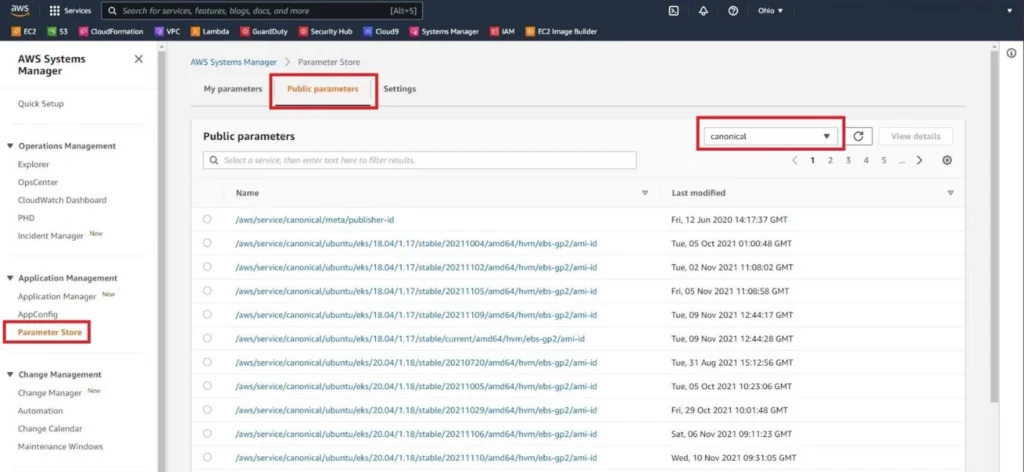

In the console, Ubuntu 21.10 is not a native option. The way to get around this is by using a Public AWS SSM Parameter. SSM Parameters are value storage objects that can contain simple strings, integers, or complex objects such as JSON documents and can be used to inject variables or store secret values such as database passwords or API keys. AWS maintains "latest" Parameter namespaces that contain the latest AMI ID for specific architectures and OS's such as the latest Amd64 EBS gp2-based image for Ubuntu 21.10 with the following parameter: /aws/service/canonical/ubuntu/server-minimal/21.10/stable/current/amd64/hvm/ebs-gp2/ami-id. AWS has similar Parameters for Amazon Linux 2, Windows, and other OS versions too which you can easily view in the AWS SSM Parameter Store console as shown below (FIG. 1).

Figure 1 - Public SSM Parameters

Note: When using a "custom" parent image (e.g., not an AWS-managed option) EC2IB will not "sync" with the upstream images for the purpose of re-build automation. You will need to manually update and re-run pipelines periodically as the latest Image updates.
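One way to notice when a manual re-run is due is to compare the parent AMI recorded in an existing Recipe with the value the public Parameter currently resolves to. The following is only a sketch under that assumption: `parent_is_stale` and `check_parent` are hypothetical helper names, and the Recipe ARN and Parameter name are values you supply once the Recipe exists.

```shell
#!/usr/bin/env bash
# Sketch: decide whether a Recipe's parent AMI has fallen behind the public SSM Parameter.
# parent_is_stale and check_parent are hypothetical helper names.
parent_is_stale() {
  local recipe_parent="$1" latest_ami="$2"
  # Stale only if we resolved a latest AMI and it differs from the Recipe's parent.
  [ -n "$latest_ami" ] && [ "$recipe_parent" != "$latest_ami" ]
}

# Example wiring (requires AWS credentials; both arguments are values you supply).
check_parent() {
  local recipe_arn="$1" param="$2" current latest
  current="$(aws imagebuilder get-image-recipe --image-recipe-arn "$recipe_arn" \
    --query 'imageRecipe.parentImage' --output text)" || return 1
  latest="$(aws ssm get-parameter --name "$param" \
    --query 'Parameter.Value' --output text)" || return 1
  if parent_is_stale "$current" "$latest"; then
    echo "Parent image $current is behind $latest; consider a new Recipe version"
  else
    echo "Parent image $current is current"
  fi
}
```

This uses the AWS CLI `--query` and `--output text` options rather than jq, so it does not depend on the configured output format.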

We will resolve this Parameter, save it as an environment variable, and inject it into our Recipe to use as the parent Image. First, save the resolved AMI ID (ami-001844416bebc3258 at the time of this writing) as an environment variable and check it with the following commands.

Note: You may need to install jq; the command to do so is provided below in case it is not installed or has not been updated. You will also need to set your AWS CLI output format to json (via aws configure), or replace all jq references with the AWS CLI --query flag.

sudo apt install -y jq

export UBUNTU_21_AMI=$(aws ssm get-parameter --name /aws/service/canonical/ubuntu/server-minimal/21.10/stable/current/amd64/hvm/ebs-gp2/ami-id | jq --raw-output '.Parameter.Value')

echo $UBUNTU_21_AMI

The AWS SSM CLI command get-parameter returns a payload that includes the name, type of parameter, version number, and other details, so we use jq to parse out the specific value that we need. We use the --raw-output flag purposely to ensure we do not get a JSON string (wrapped in quotation marks) back for the parameter. Now we are ready to create our first Recipe with the AMI set. We will be using a "JSON skeleton" for this CLI command because its mildly complex structure is easier to write in JSON.

Note: Ensure you change $MY_REGION to your AWS Region and change the value for $MY_ACCOUNT to your AWS Account. You can dynamically set that Account variable with the following command: export MY_ACCOUNT=$(aws sts get-caller-identity | jq .Account --raw-output)

cat <<EOF >recipe-cli.json
{
  "name": "MinimalUbuntu2110withMDE",
  "description": "Recipe installs basic dependencies and Microsoft Defender for Endpoint to a Minimal Ubuntu 21.10 AMI",
  "semanticVersion": "1.0.0",
  "components": [
    {
      "componentArn": "arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:component/installmdeubuntu/1.0.0"
    },
    {
      "componentArn": "arn:aws:imagebuilder:$MY_REGION:aws:component/aws-cli-version-2-linux/x.x.x"
    }
  ],
  "parentImage": "$UBUNTU_21_AMI",
  "blockDeviceMappings": [
    {
      "deviceName": "/dev/sda1",
      "ebs": {
        "encrypted": false,
        "deleteOnTermination": true,
        "volumeSize": 24,
        "volumeType": "gp2"
      }
    }
  ],
  "workingDirectory": "/tmp",
  "additionalInstanceConfiguration": {
    "systemsManagerAgent": {
      "uninstallAfterBuild": false
    }
  }
}
EOF

There are a few things to note with this CLI JSON skeleton. We provided two Components: our own Component, which installs Microsoft Defender for Endpoint and its required dependencies but does not configure it, and an AWS-managed Component, which installs and configures the AWS CLI v2. Components run in the order in which they are provided, so if you ever have cross-component dependencies, ensure they are in the right order. Additionally, we had to override the default for uninstallAfterBuild, which would otherwise remove the SSM Agent. We want to keep it so we can automate patching and configuration compliance monitoring with AWS SSM State Manager and Patch Manager.

Lastly, we provide the baseline root volume EBS configuration. We can specify encryption settings and per-volume properties such as IOPS, throughput, size, and device name, which will vary by the type of EBS Volume we choose. In our case, we do not enable encryption. The default encryption settings would use an AWS-managed Key Management Service (KMS) Customer Master Key (CMK), which is Regionally bound, unique to the Account, and cannot be shared, and this would prevent the Image from being shared. You can technically create a customer-managed KMS CMK with a Key Policy that allows your entire AWS Organization (or specific Accounts or IAM Principals) to use the Key, but that is beyond the scope of this entry.

Note: When you reference the semantic Version of an AWS-managed Component as x.x.x it will resolve the latest Version of the Component.
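The ARNs in this walkthrough all follow the same shape, so a small helper can assemble them. This is a minimal sketch: `ib_arn` is a hypothetical function, and lowercasing the resource name is an assumption based on the ARNs shown in this entry (for example, InstallMDEUbuntu becomes installmdeubuntu). Names containing spaces or other characters may be normalized differently, so prefer the ARN returned by the create call when in doubt.

```shell
#!/usr/bin/env bash
# Sketch: build Image Builder ARNs in the shape used throughout this walkthrough.
# ib_arn is a hypothetical helper; lowercasing the name is an assumption drawn
# from the ARNs in this entry, not a documented guarantee.
ib_arn() {
  local region="$1" owner="$2" type="$3" name="$4" version="${5:-}"
  local id
  id="$(printf '%s' "$name" | tr '[:upper:]' '[:lower:]')"
  if [ -n "$version" ]; then
    # Versioned resources: components, image recipes, images.
    printf 'arn:aws:imagebuilder:%s:%s:%s/%s/%s\n' "$region" "$owner" "$type" "$id" "$version"
  else
    # Unversioned resources: pipelines, infrastructure and distribution configurations.
    printf 'arn:aws:imagebuilder:%s:%s:%s/%s\n' "$region" "$owner" "$type" "$id"
  fi
}
```

For an AWS-managed Component, pass `aws` as the owner and `x.x.x` as the version, as in `ib_arn "$MY_REGION" aws component aws-cli-version-2-linux x.x.x`; for your own resources, pass your Account ID.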

To create the EC2IB Recipe, issue the following command from the directory you used to create the JSON document in the previous step.

aws imagebuilder create-image-recipe --cli-input-json file://recipe-cli.json

Ensure you copy the ARN of your Recipe, as we will need it in a later step when we create our Pipeline. The next piece we need to create is the Infrastructure Configuration, which tells EC2IB what type of EC2 instance you want to build on (e.g., m5.large or t3.small), the network and security details, and most importantly, the IAM Instance Profile that gives your instance permissions to execute the Components and Tests within. In the next steps, we will create a sample Role that has the minimum necessary permissions for EC2IB to use. If you have used EC2IB in the past, you should already have a Role, likely named "EC2InstanceProfileForImageBuilder", and you can skip the next few steps.

First, let's create a Trust Policy JSON document which will be passed to the CLI command to create an IAM Role. The Trust Policy is what is passed to the "Assume Role Document" part of an IAM Role, it "trusts" other AWS service principals, other AWS accounts, or federated identities – in our case we must trust the Amazon EC2 service principal to use this Role as part of an Instance Profile.

cat <<EOF >trust-policy.json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "ec2.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF

Create the IAM Role and pass the Trust Policy JSON document to it as an argument as shown below.

aws iam create-role \
  --role-name EC2IBRole \
  --description 'Provides minimum necessary permissions to EC2 Image Builder for usage in Image Pipelines' \
  --path '/' \
  --assume-role-policy-document file://trust-policy.json

Issue the following commands to attach the required AWS Managed Policies to your newly created IAM Role. These are required by EC2IB for EC2 AMI creation.

aws iam attach-role-policy \
  --role-name EC2IBRole \
  --policy-arn arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore

aws iam attach-role-policy \
  --role-name EC2IBRole \
  --policy-arn arn:aws:iam::aws:policy/EC2InstanceProfileForImageBuilder

Now create an Instance Profile and add our previously created IAM Role to it. You should wait at least a second between issuing commands due to eventual consistency issues which can crop up with the IAM APIs.

aws iam create-instance-profile --instance-profile-name EC2IBInstanceProfile

sleep 1

aws iam add-role-to-instance-profile \
  --instance-profile-name EC2IBInstanceProfile \
  --role-name EC2IBRole

Now that the Instance Profile has been created, you will need to collect the following details and substitute each for the placeholder variable shown in parentheses after it: a Subnet with connectivity to the internet ($MY_SUBNET) and a Security Group which at least allows HTTPS (TCP 443) traffic ($MY_SGID). Ensure you keep the quotation marks, as they're needed for the JSON document.

Note: This is an abbreviated configuration that leaves out IMDSv2 and Logging configurations. It purposely does not specify an EC2 Key Pair, as any failure will cause the EC2IB infrastructure to self-destruct.

cat <<EOF >infra-config.json
{
  "name": "MDEInfraConfig",
  "description": "Infrastructure Configuration for MDE Installation Recipe",
  "instanceTypes": [
    "t3.small",
    "t3.medium"
  ],
  "instanceProfileName": "EC2IBInstanceProfile",
  "securityGroupIds": [
    "$MY_SGID"
  ],
  "subnetId": "$MY_SUBNET",
  "terminateInstanceOnFailure": true
}
EOF

Create the Infrastructure Configuration with the following command:

aws imagebuilder create-infrastructure-configuration --cli-input-json file://infra-config.json

After creating the Infrastructure Configuration, save the ARN again, as we will need it for the Pipeline. Finally, we need to create a Distribution Configuration that tells EC2IB which Regions to distribute the AMI to, what to call it, and who is given access to use it. Although the EC2IB Console suggests you can take advantage of the recently released (as of November 2021) ability to share AMIs with Organizations and Organizational Units (OUs), you cannot specify it in either the Console or the CLI. We will instead use the EC2 API to share the finalized AMI ID. This is not to be confused with the EC2IB Image resource itself, which can only be used within EC2IB as a Parent Image for other Recipes.

As before, we will start with a JSON CLI skeleton given the complex structure, and then issue the AWS CLI command, as shown below. Ensure you are replacing the value for $MY_REGION appropriately.

cat <<EOF >distro-config.json
{
  "name": "Ubuntu2110MDEDistroConfig",
  "description": "Creates an Ubuntu 21.10 Image with MDE in this Region",
  "distributions": [
    {
      "region": "$MY_REGION",
      "amiDistributionConfiguration": {
        "name": "Ubuntu2110MDE-$MY_REGION-{{ imagebuilder:buildDate }}",
        "description": "Ubuntu 21.10 with Microsoft Defender for Endpoint",
        "amiTags": {
          "CreatedBy": "$MY_ACCOUNT"
        }
      }
    }
  ]
}
EOF

aws imagebuilder create-distribution-configuration --cli-input-json file://distro-config.json

This Distribution Configuration will only provide an Image in your current Region. The AMI name includes the Region name as well as the date that the Image was built (using the EC2IB interpolated parameter {{ imagebuilder:buildDate }}) so that teams can ensure they use the latest Image. As before, take down the ARN of this Distribution Configuration. We are finally ready to create our Pipeline.

aws imagebuilder create-image-pipeline \
  --name Ubuntu2110MDEPipeline \
  --description 'Pipeline to create Ubuntu 21.10 Image with MDE' \
  --image-recipe-arn arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:image-recipe/minimalubuntu2110withmde/1.0.0 \
  --infrastructure-configuration-arn arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:infrastructure-configuration/mdeinfraconfig \
  --distribution-configuration-arn arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:distribution-configuration/ubuntu2110mdedistroconfig \
  --enhanced-image-metadata-enabled \
  --schedule scheduleExpression='cron(30 22 ? * tue#2 *)',timezone='UTC',pipelineExecutionStartCondition='EXPRESSION_MATCH_AND_DEPENDENCY_UPDATES_AVAILABLE' \
  --status ENABLED

The provided Schedule metadata will create a Cron job that runs on the second Tuesday of every month at 10:30 PM (22:30) UTC, with the start condition to fire only if the Cron job matches and if there are any updates found for the Components or upstream Packages. This should be communicated to teams who will consume this Image as they may have Regex rules or other automation to ensure they pull the latest monthly Image as well. We will create the Image by manually invoking the Pipeline, which may take up to 30 minutes to complete.

aws imagebuilder start-image-pipeline-execution --image-pipeline-arn arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:image-pipeline/ubuntu2110mdepipeline

Navigate to the Image Pipelines section of the EC2 Image Builder Console and select the Ubuntu2110MDEPipeline that was created. Under the Output Images tab, you should see a status message on the Image. A build can take a long time depending on the infrastructure you are building on, how many Components are part of the Recipe, and the phases within them, such as tests and validation. For our purposes, it should complete quickly if all code examples were used as-is.
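If you would rather watch the build from the terminal than the Console, the status can be polled with the CLI. The following is a minimal sketch, not the approach used by the authors: `is_terminal_state` and `wait_for_image` are hypothetical helper names, the 60-second default interval is arbitrary, and the Image ARN is assumed to be the build version ARN of the Image the Pipeline produced.

```shell
#!/usr/bin/env bash
# Sketch: poll an Image Builder image until it reaches a terminal state.
# is_terminal_state and wait_for_image are hypothetical helper names.
is_terminal_state() {
  case "$1" in
    AVAILABLE|FAILED|CANCELLED|DEPRECATED|DELETED) return 0 ;;
    *) return 1 ;;  # e.g. PENDING, CREATING, BUILDING, TESTING, DISTRIBUTING
  esac
}

wait_for_image() {
  local image_arn="$1" interval="${2:-60}" status
  while true; do
    status="$(aws imagebuilder get-image --image-build-version-arn "$image_arn" \
      --query 'image.state.status' --output text)" || return 1
    echo "status: $status"
    if is_terminal_state "$status"; then
      # Succeed only when the Image actually became available.
      [ "$status" = "AVAILABLE" ]
      return
    fi
    sleep "$interval"
  done
}
```

A call might look like `wait_for_image arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:image/minimalubuntu2110withmde/1.0.0/1`. The same `get-image` response also reports the resulting AMI under `image.outputResources.amis`, which can be extracted with `--query` if you prefer not to copy the AMI ID from the Console.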

Once complete, we can share our new "golden" Image with the rest of our AWS Organization using the EC2 API (modify-image-attribute). This API lets us interact with the resource-based permissions of an AMI. Traditionally it would be used to make an image fully public or to share it with specific accounts, but we can also target our entire AWS Organization (or specific Organizational Units) as required. This should not be confused with using AWS Resource Access Manager (RAM) to share the EC2IB Image object, which is a logical resource within EC2IB that can be used as a Parent Image for other Recipes. Sharing the EC2IB Image (not the AMI) using RAM would only allow the Image we baked to be used as the source for another Recipe; it would not give the ability to launch a new instance.

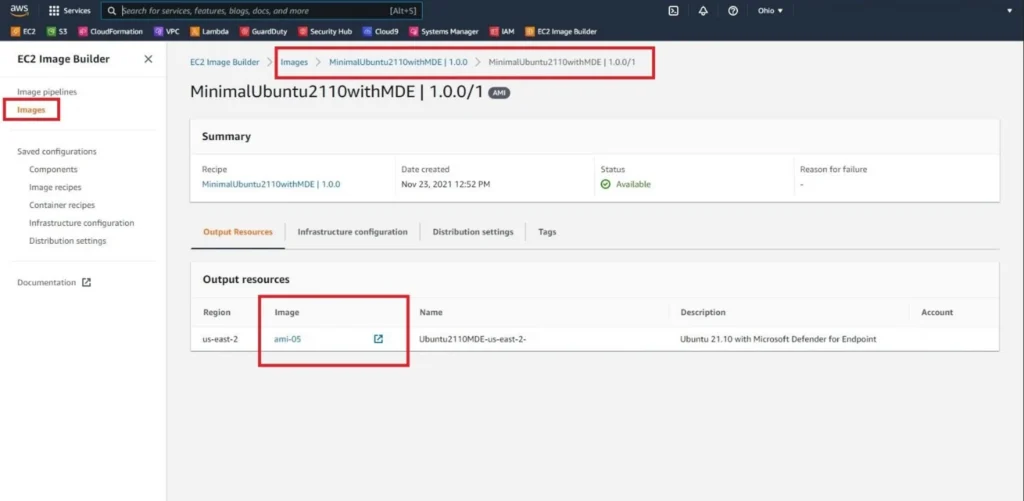

Once the status of the Image changes to Available, select the Images console from the left-hand navigation pane, select the hyperlink within the Version (should be 1.0.0/1), and copy the AMI ID under the Image column as shown below (FIG. 2).

Figure 2 - AMI ID for Images

Note: Also ensure you copy the ARN of the Image Builder Image.

We will now distribute the AMI to our entire AWS Organization within our Region. AMIs are regional resources. You will need to either modify the Distribution Configuration to build your AMI in other Regions or use the copy-image CLI command (or equivalent API/SDK method) to send the AMI to other Regions. Once there, a new AMI ID will be provided, and you'll need to create more RAM shares or use the EC2 APIs to share the AMI with other Accounts, OUs, or Organizations.
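For the copy-image route, a loop over target Regions is usually enough. The sketch below is illustrative only: `run_or_print` and `copy_ami_to_regions` are hypothetical helper names, and the `DRY_RUN` switch is an assumption added so the commands can be reviewed before anything is copied. The `aws ec2 copy-image` options used are standard AWS CLI.

```shell
#!/usr/bin/env bash
# Sketch: copy an AMI to additional Regions. With DRY_RUN=1 (the default here)
# the aws commands are only printed, so the loop can be reviewed before it runs.
# run_or_print and copy_ami_to_regions are hypothetical helper names.
run_or_print() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

copy_ami_to_regions() {
  local source_region="$1" ami_id="$2" name="$3"; shift 3
  local region
  for region in "$@"; do
    # Each copy returns a new AMI ID that is specific to the destination Region.
    run_or_print aws ec2 copy-image \
      --source-region "$source_region" \
      --source-image-id "$ami_id" \
      --name "$name" \
      --region "$region" \
      --query ImageId --output text
  done
}
```

For example, `DRY_RUN=0 copy_ami_to_regions us-east-1 "$MDE_AMI_ID" Ubuntu2110MDE us-west-2 eu-west-1` would print one new AMI ID per destination Region. Launch permissions do not carry over to the copies, so each one has to be shared again.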

First, set an environment variable for your AWS Organization using the describe-organization CLI command. The EC2 API to share AMIs requires the ARN, which is why we grab that value instead of the Organization ID.

export ORG_ID=$(aws organizations describe-organization | jq '.Organization.Arn' --raw-output)

echo $ORG_ID

Set an environment variable for the freshly-baked AMI ID you copied in the previous steps. Ensure you replace the portion of the example code that says YOURAMIHERE with your actual ID number.

export MDE_AMI_ID=ami-YOURAMIHERE

echo $MDE_AMI_ID

Share the AMI with your entire AWS Organization with the following command, which uses the modify-image-attribute CLI command from the EC2 APIs.

aws ec2 modify-image-attribute \
  --attribute launchPermission \
  --image-id $MDE_AMI_ID \
  --operation-type add \
  --no-dry-run \
  --organization-arns $ORG_ID

At this point you can log into another AWS Account within your Organization to verify the AMI was shared successfully. Within the same Region, it will have the exact same ID. To verify that the change has been applied, you can use the following command. This only verifies that the modification to the launch permission attribute is registered with the EC2 service.

aws ec2 describe-image-attribute \
  --attribute launchPermission \
  --image-id $MDE_AMI_ID \
  --no-dry-run

Now that we have successfully built our MDE-enabled Ubuntu 21.10 Image and shared it with our organization, we can go ahead and finish the registration, verify that it reports to the MDE Console (which will prove the future feasibility of post-provisioning setup), and get ready to clean up our deployed resources. In the next entry of this blog series, readers will be provided with a version of the CloudFormation template that is used by the Panoptica Office of the CISO to bootstrap this setup and create automation to continue to build newer versions of this Image and distribute it to your entire organization. Additionally, we will provide the ability to perform post-launch configuration, register the MDE Agent, and Tag it for your organization, which will allow you to keep track of per-instance compliance with EDR/XDR coverage.

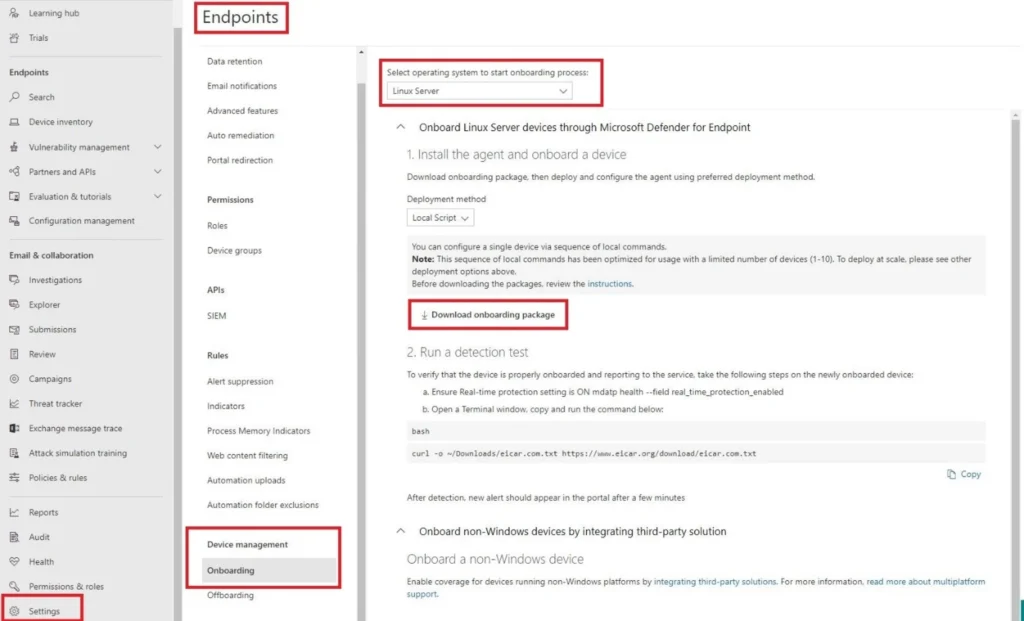

First, we will need to ensure the latest Activation script package is uploaded to our S3 Bucket. Navigate to the Microsoft 365 Defender Console and navigate to Settings in the left-hand navigation pane. Once there, select the Endpoints option and choose Onboarding with the new navigation pane for the sub-menu. Change the dropdown menu for the select operating system to start the onboarding process to Linux Server and select the Download onboarding package as shown below (FIG. 3).

Figure 3 - MDE Linux Onboarding Script

Upload the onboarding package to the same S3 bucket that holds the EC2IB Component YAML file with the following command.

aws s3 cp ./WindowsDefenderATPOnboardingPackage.zip s3://$MY_BUCKET/mdatp/WindowsDefenderATPOnboardingPackage.zip

When we create our EC2 Instance from our MDE-enabled AMI, we will use User Data to provide the launch script, which (by default) runs only once, when the Instance first launches. However, the script downloads the package from S3 and thus needs permissions to do so, so we need to create another Instance Profile with the associated IAM Policies and Roles.

Create a new IAM Policy. This will be attached to your Role along with the AWS-managed Policy that Systems Manager needs to register your Instance. This specific Policy allows EC2 to download the MDE package from your S3 directory (/mdatp).

cat <<EOF >s3-policy.json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "GetMdePackage",
      "Effect": "Allow",
      "Action": [
        "s3:GetObjectAcl",
        "s3:GetObject",
        "s3:ListBucket",
        "s3:GetBucketAcl",
        "s3:GetBucketLocation"
      ],
      "Resource": [
        "arn:aws:s3:::$MY_BUCKET/mdatp*",
        "arn:aws:s3:::$MY_BUCKET"
      ]
    }
  ]
}
EOF

aws iam create-policy \
  --policy-name GetObjectForMDEPackage \
  --policy-document file://s3-policy.json \
  --description 'Allow S3 GetObject and GetBucket permissions to retrieve MDE Onboarding Package'

Now we will create and attach the rest of the Permissions required and associate them with a new IAM Instance Profile. As we have run similar commands already, we won't deep dive into their purpose.

aws iam create-role \
  --role-name MDEProvisionRole \
  --description 'Provides minimum necessary permissions to EC2 to retrieve MDE Onboarding Packages and register with SSM' \
  --path '/' \
  --assume-role-policy-document file://trust-policy.json

sleep 1

aws iam attach-role-policy \
  --role-name MDEProvisionRole \
  --policy-arn arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore

aws iam attach-role-policy \
  --role-name MDEProvisionRole \
  --policy-arn arn:aws:iam::$MY_ACCOUNT:policy/GetObjectForMDEPackage

aws iam create-instance-profile --instance-profile-name MDEProvisionRoleIP

sleep 1

aws iam add-role-to-instance-profile \
  --instance-profile-name MDEProvisionRoleIP \
  --role-name MDEProvisionRole

Now that our new Instance Profile is ready, let's launch a new EC2 Instance with our new AMI. First, we must define our User Data. User Data gives us a way to inject the commands we want our EC2 Instance to run as it is being launched. This may be a preferred way for developers and engineers to build their Kubernetes and container hosts, as they can tightly couple their post-provisioning with their infrastructure code and version control it from there. The cloud-init service (which provides the engine to run the User Data) can also be configured to run it upon every boot if you desire. Luckily, the AWS CLI will handle Base64 encoding and formatting of the User Data so we do not need to worry about it. We can just load it from a text file, provided that the final size of the User Data does not exceed 16 KB.
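As the User Data script grows, it can be worth checking its size before launch. This is a minimal sketch, assuming the 16 KB limit applies to the raw file before Base64 encoding; `user_data_fits` and `USER_DATA_LIMIT_BYTES` are hypothetical names.

```shell
#!/usr/bin/env bash
# Sketch: confirm a User Data file stays within the 16 KB limit.
# user_data_fits is a hypothetical helper; the limit is checked against the raw file size.
USER_DATA_LIMIT_BYTES=16384

user_data_fits() {
  local file="$1" size
  # wc -c reports the byte count; a missing or unreadable file returns status 2.
  size="$(wc -c < "$file")" || return 2
  [ "$size" -le "$USER_DATA_LIMIT_BYTES" ]
}
```

Once the User Data file exists, a guard such as `user_data_fits userdata.txt || echo "User Data is too large"` can be run before the launch command.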

Paste the following text into a file named userdata.txt. Ensure that you ONLY replace the value of $MY_BUCKET with your S3 Bucket and nothing else, as no other changes are needed for the script.

#!/bin/bash
apt install --only-upgrade mdatp -y
aws s3 cp s3://$MY_BUCKET/mdatp/WindowsDefenderATPOnboardingPackage.zip .
unzip WindowsDefenderATPOnboardingPackage.zip
python3 MicrosoftDefenderATPOnboardingLinuxServer.py
mdatp threat policy set --type potentially_unwanted_application --action audit
export INSTANCE_ID=$(curl http://169.254.169.254/latest/meta-data/instance-id)
mdatp edr tag set --name GROUP --value $INSTANCE_ID

Important Note: You will need to modify the following run-instances command to include a Key Pair if you do not use Session Manager to access your Instances. It has been observed that Session Manager may not work on Ubuntu 21.10 despite successful installation during the EC2IB Pipeline build.

cat <<EOF >ebs-config.json

[

{

"DeviceName": "/dev/sda1",

"Ebs": {

"DeleteOnTermination": true,

"VolumeSize": 24,

"VolumeType": "gp2",

"Encrypted": false

}

}

]

EOF

aws ec2 run-instances \

--image-id $MDE_AMI_ID \

--instance-type t3.medium \

--security-group-ids $MY_SGID \

--subnet-id $MY_SUBNET \

--user-data file://userdata.txt \

--enable-api-termination \

--no-dry-run \

--iam-instance-profile Name=MDEProvisionRoleIP \

--block-device-mappings file://ebs-config.json \

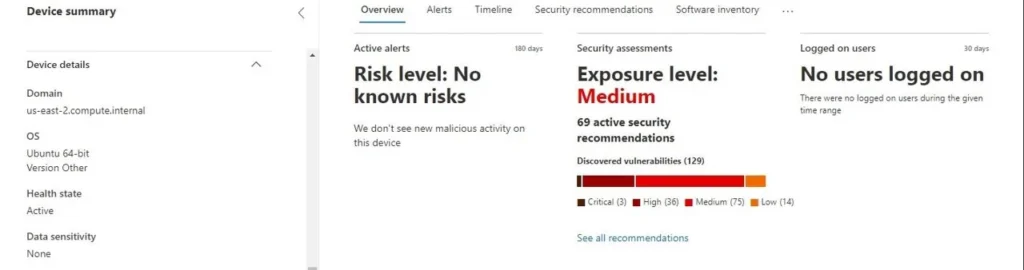

--count 1It may take up to 15 minutes for the Instance to register with the MDE Device Inventory Console, but there may not be any vulnerability data available right away. In the below screenshot (FIG. 4), an example of this exact same build is shown which has drastically lower amounts of vulnerabilities (though some still do exist) versus a standard Ubuntu 18.04LTS or 20.04LTS Image. This is important to note for DevOps or Cloud Infrastructure Security teams who are overseeing OS builds, that despite using the most up-to-date AMIs that have minimal components, there will still be baked-in vulnerabilities. You should prioritize exploitable vulnerabilities or vulnerabilities that are targeted by threat actors within your sphere of influence - whether they are cybercriminals, State or State-sponsored, or opportunistic attackers who have favored vulnerabilities to target.

Figure 4 - Ubuntu 21.10 Image in MDE Device Inventory
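The cleanup commands that follow reference $MDE_INSTANCE_ID, which is not set anywhere earlier in this entry. One way to recover it is to look up running Instances launched from the new AMI. The sketch below is an assumption-laden illustration: `run_or_print` and `find_instances_for_ami` are hypothetical helper names, and `DRY_RUN` is a switch added here so the command can be inspected before it is executed.

```shell
#!/usr/bin/env bash
# Sketch: list the IDs of pending or running Instances launched from a given AMI.
# With DRY_RUN=1 (the default here) the aws command is printed, not executed.
# run_or_print and find_instances_for_ami are hypothetical helper names.
run_or_print() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

find_instances_for_ami() {
  local ami_id="$1"
  run_or_print aws ec2 describe-instances \
    --filters "Name=image-id,Values=$ami_id" "Name=instance-state-name,Values=pending,running" \
    --query 'Reservations[].Instances[].InstanceId' \
    --output text
}
```

With credentials in place, `export MDE_INSTANCE_ID=$(DRY_RUN=0 find_instances_for_ami "$MDE_AMI_ID")` sets the variable, assuming only one Instance was launched from the AMI.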

Before closing, we will clean up all the resources and services we deployed. If you want to keep what you built, feel free to skip these next few steps. Unfortunately, you cannot offboard the instance via automation because it runs an unsupported OS version. You will need to wait for the instance to deregister from Device Inventory, or locally install and run the offboarding script provided in your Microsoft 365 Defender console. To remove all other components, run the following commands, setting $MDE_INSTANCE_ID to the ID of the Instance you launched.

aws ec2 terminate-instances --instance-ids $MDE_INSTANCE_ID

aws imagebuilder delete-image --image-build-version-arn arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:image/minimalubuntu2110withmde/1.0.0/1

aws imagebuilder delete-image-pipeline --image-pipeline-arn arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:image-pipeline/ubuntu2110mdepipeline

aws imagebuilder delete-image-recipe --image-recipe-arn arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:image-recipe/minimalubuntu2110withmde/1.0.0

aws imagebuilder delete-component --component-build-version-arn arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:component/installmdeubuntu/1.0.0/1

aws imagebuilder delete-distribution-configuration --distribution-configuration-arn arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:distribution-configuration/ubuntu2110mdedistroconfig

aws imagebuilder delete-infrastructure-configuration --infrastructure-configuration-arn arn:aws:imagebuilder:$MY_REGION:$MY_ACCOUNT:infrastructure-configuration/mdeinfraconfig

aws iam remove-role-from-instance-profile --instance-profile-name EC2IBInstanceProfile --role-name EC2IBRole

aws iam delete-instance-profile --instance-profile-name EC2IBInstanceProfile

aws iam detach-role-policy --role-name EC2IBRole --policy-arn arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore

aws iam detach-role-policy --role-name EC2IBRole --policy-arn arn:aws:iam::aws:policy/EC2InstanceProfileForImageBuilder

aws iam delete-role --role-name EC2IBRole

aws iam remove-role-from-instance-profile --instance-profile-name MDEProvisionRoleIP --role-name MDEProvisionRole

aws iam delete-instance-profile --instance-profile-name MDEProvisionRoleIP

aws iam detach-role-policy --role-name MDEProvisionRole --policy-arn arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore

aws iam detach-role-policy --role-name MDEProvisionRole --policy-arn arn:aws:iam::$MY_ACCOUNT:policy/GetObjectForMDEPackage

aws iam delete-role --role-name MDEProvisionRole

aws iam delete-policy --policy-arn arn:aws:iam::$MY_ACCOUNT:policy/GetObjectForMDEPackage

aws ec2 deregister-image --image-id $MDE_AMI_ID --no-dry-run

In this blog, we demonstrated how to use EC2 Image Builder to create a brand new AMI with the required dependencies for running Microsoft Defender for Endpoint and the AWS Systems Manager Agent. We dove into the various parts of EC2 Image Builder and built them using the AWS CLI, and we set up the AWS IAM Policies, Roles, and Instance Profiles required to grant permissions to the various services. We were able to share our new AMI with our entire AWS Organization, which is the fastest way to distribute an AMI for use. Finally, we used our freshly baked AMI to launch a new EC2 Instance, finalized the configuration of Microsoft Defender for Endpoint on our Ubuntu 21.10 Minimal EC2 Instance, and did a light analysis of the (relative) lack of vulnerabilities.

Using EC2 Image Builder and User Data within your Infrastructure-as-Code or infrastructure build scripts is a great way to get started with a secure baseline upon which you can build your applications and run your containers or Kubernetes clusters. By choosing a "Minimal" image with the latest flavor of your preferred operating system, you can get ahead of the vulnerability and patch management curve by starting with the fewest vulnerabilities and thus drastically reducing the attack surface for internet-borne attacks. In a production environment, you should strongly consider additional hardening techniques such as following CIS or DISA STIG guidelines, further removing unnecessary components, and exploring a move to another OS such as Amazon Linux 2 or Amazon Linux 2022 to further reduce vulnerability "debt." This is also not a substitute for other strong cloud security measures such as implementing network and identity security products, performing threat-centric evaluations such as Breach & Attack Simulations, or looking into a platform like Panoptica to help you identify legitimate attack paths and security misconfigurations from your codebase to your workload runtimes.

Stay Dangerous.